Industry leaders from ARM, Intel, Nvidia and AMD say there are gaps in verification metrics. Ann Steffora Mutschler of Semiconductor Engineering compiled a panel discussion held during DAC into a great article, “Gaps In Verification Metrics”. The theme of the panel discussion was whether conventional verification metrics are running out of steam.

Following are some key comments from industry leaders.

Alan Hunter, senior principal engineer at ARM, shared a new metric called statistical coverage and its benefits.

Maruthy Vedam, senior director of engineering in the System Integration and Validation group at Intel, indicated that verification metrics play a huge role in maintaining quality.

Anshuman Nadkarni, who manages the CPU and Tegra SoC verification teams at Nvidia, asserted, “Metrics is one area where the EDA industry has fallen short”.

Farhan Rahman, chief engineer for augmented reality at AMD, says, “The traditional metrics have their place. But what we need to do is augment with big data and machine learning algorithms”.

What do verification teams think about it?

Sounds interesting. Where we are, getting code and functional coverage to 100% is itself a challenge, given the schedule constraints. Improvements and additional coverage metrics would be nice, but they sound like a lot of overhead for already time-crunched design and verification folks.

Industry leaders do feel there is a gap in verification metrics. Yet addressing it by enhancing existing metrics and adding new ones risks overwhelming verification teams. How do we solve this paradox? Before we get to that, you might be wondering how we know this.

How do we know it?

If you care to know, here is a short context.

How can we improve functional verification quality? We started looking for answers to this question two years ago, exploring it from three angles: quality by design, quality issue diagnosis and quality boost.

When we shared this with the verification community, they said: here is the deal, what’s done is done; we cannot do any major surgery on our test benches. However, if you can show us the possible coverage holes with our existing test bench, we are open to exploring.

We found that most verification teams were doing a good job of closing code coverage and requirements-driven black box functional coverage. However, white box functional coverage was at the mercy of the designer. Designers did address some of it, but their focus was less on verification and more on covering their own assumptions and fears.

So we started building automation for generating white box functional coverage to quickly discover the coverage holes. As we analyzed further, we found that functional coverage alone was not enough to tell whether the stimulus did a good job on micro-architecture coverage. It did not give a clear idea of whether the stimulus exercised something sufficiently, or whether the relative distribution of stimulus across features was well balanced. So we added statistical coverage. Based on the high-level micro-architecture, we started generating both functional and statistical coverage.
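To make the distinction concrete, here is a minimal sketch of how a statistical view differs from a functional one for a single micro-architecture element. It is not our generator; the per-cycle occupancy trace, the `FifoFullStats` record and the `fifo_full_stats` helper are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass
class FifoFullStats:
    full_hit: bool        # functional view: was "full" ever reached?
    full_episodes: int    # statistical view: how many separate times?
    min_full_cycles: int  # shortest stretch of consecutive full cycles (0 if none)
    max_full_cycles: int  # longest stretch of consecutive full cycles (0 if none)


def fifo_full_stats(occupancy, depth):
    """Summarize how a per-cycle FIFO occupancy trace exercised the full state."""
    # Length of each run of consecutive cycles in which the FIFO was full.
    runs = [
        sum(1 for _ in cycles)
        for is_full, cycles in groupby(occupancy, key=lambda level: level == depth)
        if is_full
    ]
    return FifoFullStats(
        full_hit=bool(runs),
        full_episodes=len(runs),
        min_full_cycles=min(runs, default=0),
        max_full_cycles=max(runs, default=0),
    )


# Two made-up traces for a FIFO of depth 4 (illustrative data, not real results).
trace_a = [0, 1, 2, 3, 4, 3, 2, 1, 0]
trace_b = [0, 2, 4, 4, 4, 2, 4, 4, 1, 4, 0]

stats_a = fifo_full_stats(trace_a, depth=4)
stats_b = fifo_full_stats(trace_b, depth=4)
print(stats_a)
print(stats_b)
```

Both traces hit the functional bin "FIFO full", so functional coverage cannot tell them apart. The episode count and the duration range show that the second trace stressed the full condition far more than the first.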

As it started giving some interesting results, we went back to the verification folks to show them and understand what they thought about such capabilities.

Their very candid response was that getting code and functional coverage to 100% is itself a challenge, given the schedule constraints. This additional coverage metric is nice, but it sounds like a lot of overhead for already time-crunched design and verification folks. Some teams struggle even to close code coverage before tape-out, let alone functional coverage.

Why are verification teams overloaded?

Today’s verification engineers have to worry not only about functionality, power and performance but also about security and safety. Verification planning, building verification environments, developing test suites, handling a constant influx of changing features and priorities, debugging regression failures, keeping regressions healthy and managing multiple test benches for design variations are all challenging and effort intensive.

This complexity has also led most constrained random test benches to either overwork or underwork. Conventional metrics fail to detect this.

We went to verification managers to understand their views. Verification managers are on the clock to help ship products. They face market dynamics best described as VUCA (Volatile, Uncertain, Complex and Ambiguous). That pushes them into a highly reactive state most of the time. Coping with it is definitely stressful.

Under such conditions they feel it’s wise to choose the low-risk path that has worked well in the past and has delivered results. They don’t want to burden their verification teams further without guaranteed ROI.

We started brainstorming how to deliver the benefits of white box functional coverage and statistical coverage generation without causing a lot of churn.

It’s clear there are gaps in verification metrics. It’s also clear that the field of data science is advancing, and it’s only wise for the verification world to embrace and benefit from it. How do we reduce the effort required of verification teams so they can gain the benefits of these new developments?

Should we cut down on some of existing metrics?

The first option to consider: should we cut down on some existing metrics to adopt new ones? We still need code coverage. We definitely need requirements-driven black box coverage. Ignoring white box functional coverage is the same as leaving bugs on the table. Statistical coverage can help you discover bugs that you might otherwise find only in emulation or, even worse, in silicon. We need all of them.

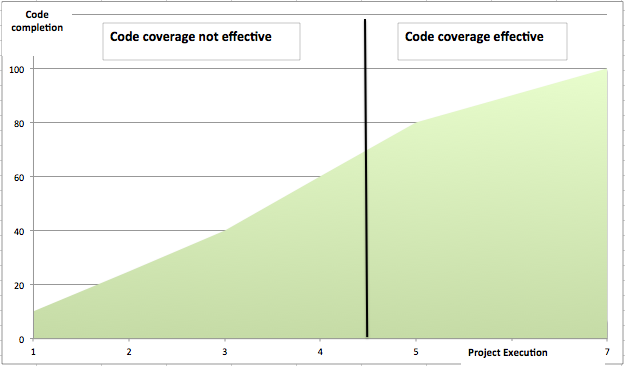

However, one mindset change we can bring in is not waiting for 100% code coverage before starting to look at other coverage metrics. At every step of functional verification closure, you need a balancing act: choose and prioritize, across the various metrics, the coverage holes that offer the highest bang per buck.

Now, expecting this analysis and prioritisation from the verification team is definitely overkill.
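As a rough illustration of that balancing act, the sketch below ranks coverage holes from different metrics by estimated risk removed per day of effort. The `CoverageHole` record, the `risk` and `effort_days` estimates and the sample holes are all assumptions made up for this example; a real team would supply its own estimates, and `effort_days` is assumed to be greater than zero.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoverageHole:
    name: str
    metric: str         # "code", "black_box", "white_box" or "statistical"
    risk: float         # estimated impact if a bug hides here (higher is worse)
    effort_days: float  # estimated effort to close the hole, assumed > 0


def rank_holes(holes):
    """Order holes by estimated risk removed per day of effort, best first."""
    return sorted(holes, key=lambda hole: hole.risk / hole.effort_days, reverse=True)


# Hypothetical holes drawn from all four metric families.
holes = [
    CoverageHole("unreached else-branch in debug block", "code", 1.0, 2.0),
    CoverageHole("arbiter never saw all requesters active", "white_box", 8.0, 1.0),
    CoverageHole("max-size packet with error injection", "black_box", 6.0, 3.0),
    CoverageHole("FIFO full only once across the regression", "statistical", 5.0, 0.5),
]

ranked = rank_holes(holes)
for hole in ranked:
    print(f"{hole.risk / hole.effort_days:5.1f}  {hole.metric:<12} {hole.name}")
```

With these made-up numbers, the code coverage hole lands last, which is the point of not waiting for 100% code coverage before looking at the other metrics.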

How can we benefit from new metrics without overloading verification teams?

We need to add a new role to the composition of successful verification engineering teams.

We are calling it the “Verification analyst”.

The verification analyst will engage with verification data to generate insights that help achieve the highest possible verification quality within the given cost and time.

Stop, I can hear you. You are thinking that adding this new role is an additional cost. Please think about the investment you have made in tool licenses for different technologies, the engineering resources around them and the compute farms that cater to all of it. Compared to the benefits of making it all work optimally to deliver the best possible verification quality, the additional cost of this new role will be insignificant.

Bottom line: we can only optimise for two out of three among quality, time and budget. So choose wisely.

Whether we like it or not, “data” is going to be at the center of our world. The verification world is going to be no exception. It’s generating lots of data in the form of coverage, bugs, regression data, code check-ins etc. Instead of rejecting the data as too much information (TMI), we need to put it to work.

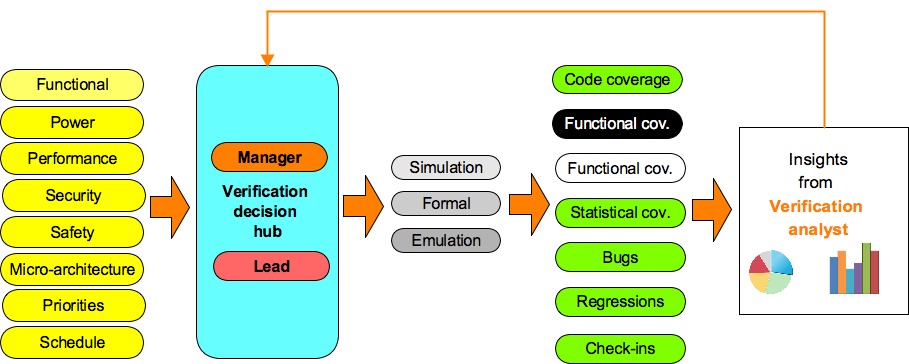

Most companies employ simulation, emulation and formal verification to meet their verification goals. We don’t have a metric-driven way to prove which approach is best suited to meeting the current coverage goals. That’s where the verification analyst will help us do verification smartly, driven by metrics.

Figure: Role of verification analyst

Role: Verification Analyst

Objective:

The verification analyst’s primary responsibility is to analyze verification data from various sources using analytics techniques, providing insights for optimizing verification execution to achieve the highest possible quality within the constraints of cost, resources and time.

Job description:

- Ability to generate and understand the different metrics provided by different tools across simulation, emulation and formal

- Good grasp of data analytics techniques using Excel, the Python pandas package and data analytics tools like Tableau or Power BI

- Build black box, white box functional and statistical coverage models to qualify the verification quality

- Collect and analyze various metrics about verification quality:

    - Code coverage

    - Requirements driven black box functional and statistical coverage

    - Micro-architecture driven white box functional coverage and statistical coverage

    - Performance metrics

    - Safety metrics

- Work with the verification team to help them understand the gaps and draw up a plan of action to fill the coverage holes and hunt the bugs, using the verification technology best suited to the problem, by doing some of the following:

    - Make sure the constrained random suite is aligned to current project priorities using statistical coverage.

        - Example: If the design can work in N configurations and only M of them are important, make sure the M configurations get the major chunk of simulation time. This can keep changing, so keep adapting.

    - Understand the micro-architecture and make sure the stimulus is doing what matters to the design. Use the known micro-architecture elements to gain such insights.

        - Example: Did the FIFO go through sufficient full and empty cycles? What were the minimum and maximum full durations? Did the first request on the arbiter come from all of the requesters? What is the relative distribution of the number of active requesters for the arbiter across all the tests? How many clock gating events occurred?

    - Identify the critical parts of the design by working with the designer and make sure they are sufficiently exercised by setting up custom coverage models around them.

        - Example: Behavior after overflow in different states, combinations of packet size aligned to internal buffer allocation, timeouts and the events leading to a timeout occurring together or within +/- 1 clock cycle of each other etc.

    - Plan and manage regressions as campaigns by rotating the focus around different areas. The idea here is to tune the constraints and seeds, and to increase or decrease the depth of coverage requirements on specific design or functional areas, based on discoveries made during execution (bugs found/fixed, new features introduced, refactoring etc.) instead of blindly running the same regressions every time. Some examples of such variations:

        - Reverse the distributions defined in the constraints

        - If there are major low power logic updates, increase the seeds for low power tests and reduce them for other tests, with appropriate metrics to confirm that this has had the intended effect

        - Regressions with all the clock domain crossings (CDC) in extreme fast to slow and slow to fast combinations

        - Regressions with only packets of maximum or minimum size

        - Regressions that create different levels of overlap among combinations of active features for varied inter-feature interaction. Qualify their occurrence with the minimum and maximum overlap metrics

- Map the coverage metrics to various project milestones so that overall coverage closure does not become a nightmare at the end

- Map the coverage holes to directed tests, constrained random tests, formal verification, emulation or low power simulations

- Analyze check-ins and bug statistics to identify the buggiest modules or test bench components, then identify actions to increase focus and coverage on them, or identify modules that are ideal for bug hunting with formal

- Promote reusable coverage models across teams to standardize quality assessment methods
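The N and M configurations example from the list above can be checked with very little code. The following is a minimal sketch, assuming the regression system can export one (configuration, simulation seconds) record per test run; the `priority_time_share` helper, the configuration names and the numbers are hypothetical.

```python
from collections import defaultdict


def priority_time_share(runs, priority_configs):
    """Return the share of simulation time spent on priority configurations,
    plus the priority configurations that never ran at all."""
    time_per_config = defaultdict(float)
    for config, sim_seconds in runs:
        time_per_config[config] += sim_seconds

    total = sum(time_per_config.values())
    priority_time = sum(
        seconds
        for config, seconds in time_per_config.items()
        if config in priority_configs
    )
    share = priority_time / total if total else 0.0
    never_run = sorted(set(priority_configs) - set(time_per_config))
    return share, never_run


# Made-up regression records: (configuration name, simulation seconds).
runs = [("cfg_a", 300.0), ("cfg_c", 500.0), ("cfg_a", 100.0), ("cfg_d", 100.0)]
priority = {"cfg_a", "cfg_b"}  # the M configurations that matter right now

share, never_run = priority_time_share(runs, priority)
print(f"{share:.0%} of simulation time went to priority configurations")
print("priority configurations never run:", never_run)
```

Because priorities keep changing, the useful part is rerunning this against each regression with the current priority set, not the one-off number.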