Verification strategy

We often hear about verification strategy. The word “strategy” may make it sound business-like and may alienate engineers. That’s how many projects end up without a clearly defined verification strategy, which leads to a bad start.

The dictionary meaning of strategy is “a plan of action designed to achieve a long-term or overall aim”. If you search for usage of this word, you will see that it has only grown over time. This suggests that strategy is becoming increasingly important in all areas of work, not just business problems.

Functional verification never ends. Its objective is to reduce the risk of using the design in its intended applications. That’s exactly why a verification strategy is important for meeting the overall verification objective in an optimal way.

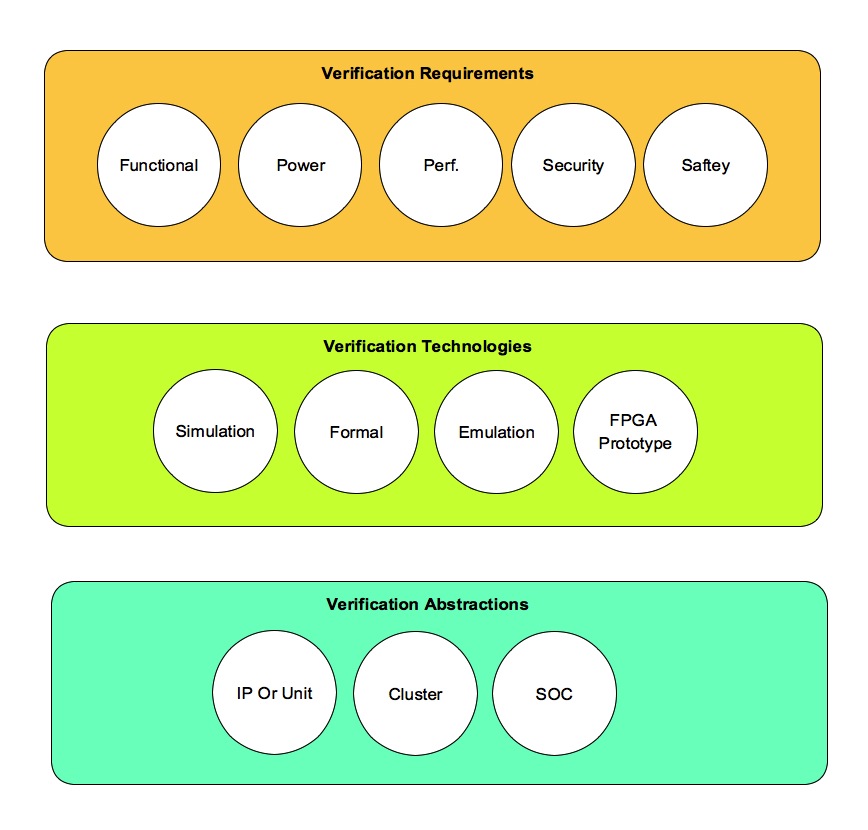

A verification strategy is a plan for meeting verification requirements using the right verification technologies at the right abstractions.

The following sections define and explain what is meant by verification requirements, verification technologies and verification abstractions. Depending on the phase of development, a different verification strategy can work out better.

Verification requirements

Verification requirements for current state-of-the-art designs are:

- Functional requirements

- Power requirements

- Performance requirements

- Safety requirements

- Security requirements

Beyond the basic functional requirements, power, performance, security and safety are new dimensions added to the scope of functional verification.

Meeting power requirements requires additional logic such as clock gating, multiple power islands, dynamically turning power islands on and off, and frequency and voltage scaling. Verifying this additional logic requires both static checks and dynamic simulation.

Dynamic simulation involves running selected low-power scenario tests in power-aware simulators. The selected tests are marked in the test plan. Before these tests can be run, additional information about the low-power design intent has to be provided in a format that power-aware simulators understand. The currently popular format for describing power intent is the Unified Power Format (UPF).

Performance verification for simple designs can consist of running a few specialized tests from the functional verification test list and checking the bandwidth and latency numbers reported by the verification components of the testbench.

Complex designs may require specialized performance monitors. These monitors can observe external interfaces, internal nodes or both for specific events to gain insight into the performance of the design. Additional specialized tools are built around the monitors to post-process their data, check whether the performance targets are met, and provide insight for debugging performance failures.
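The post-processing step described above can be sketched in a few lines of Python. This is only a minimal illustration, assuming the monitor logs one record per transaction with a start time, an end time and a byte count. The `Transaction` record, the function names and the target values are hypothetical and not taken from any specific tool.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Transaction:
    """One record logged by a (hypothetical) performance monitor."""
    start_ns: float   # time the request was observed
    end_ns: float     # time the matching response was observed
    num_bytes: int    # payload carried by the transaction


def summarize(transactions: List[Transaction]) -> Dict[str, float]:
    """Reduce monitor records to latency and bandwidth figures."""
    if not transactions:
        raise ValueError("no transactions recorded")
    latencies = [t.end_ns - t.start_ns for t in transactions]
    # Observation window: first request seen to last response seen.
    window_ns = (max(t.end_ns for t in transactions)
                 - min(t.start_ns for t in transactions))
    total_bytes = sum(t.num_bytes for t in transactions)
    return {
        "avg_latency_ns": sum(latencies) / len(latencies),
        "max_latency_ns": max(latencies),
        "bandwidth_bytes_per_ns": total_bytes / window_ns if window_ns > 0 else 0.0,
    }


def check_targets(summary: Dict[str, float],
                  max_latency_ns: float,
                  min_bandwidth_bytes_per_ns: float) -> List[str]:
    """Return a list of target violations; an empty list means the targets are met."""
    failures = []
    if summary["max_latency_ns"] > max_latency_ns:
        failures.append("max latency %.1f ns exceeds target %.1f ns"
                        % (summary["max_latency_ns"], max_latency_ns))
    if summary["bandwidth_bytes_per_ns"] < min_bandwidth_bytes_per_ns:
        failures.append("bandwidth %.2f B/ns is below target %.2f B/ns"
                        % (summary["bandwidth_bytes_per_ns"], min_bandwidth_bytes_per_ns))
    return failures


# Illustrative trace with made-up numbers, not measured data.
trace = [Transaction(0, 40, 64), Transaction(10, 70, 64), Transaction(50, 100, 64)]
summary = summarize(trace)
violations = check_targets(summary, max_latency_ns=55, min_bandwidth_bytes_per_ns=1.5)
```

Keeping the summary separate from the target check means the same monitor data can be reused for debugging, for example by listing the slowest transactions, without touching the pass/fail logic.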

Safety verification, at the first level, demands higher-quality verification. This is achieved by ensuring more complete and correct verification. To achieve completeness, traceable verification plans play a vital role. In a traceable verification plan, requirements, features, implementation and results are strongly tied together. To improve the safety of designs, additional error detection and correction structures are added at critical internal nodes within the design, such as FIFO interfaces or internal buses. This requires additional verification through assertions and specialized test cases.
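One way to picture a traceable verification plan is as a chain of links from requirement to feature to test to result. The sketch below is a simplified assumption of such a structure, not the format of any particular planning tool. The class names, status strings and test names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Feature:
    name: str
    tests: List[str] = field(default_factory=list)   # names of tests covering the feature


@dataclass
class Requirement:
    req_id: str
    features: List[Feature] = field(default_factory=list)


def trace_report(requirements: List[Requirement],
                 results: Dict[str, bool]) -> Dict[str, str]:
    """Map each requirement to 'no_tests', 'failing', 'not_run' or 'passing'.

    results maps a test name to True (pass) or False (fail);
    a test with no entry has not been run yet.
    """
    report = {}
    for req in requirements:
        # A requirement with no features, or a feature with no tests, is a plan hole.
        if not req.features or any(not f.tests for f in req.features):
            report[req.req_id] = "no_tests"
            continue
        tests = [t for f in req.features for t in f.tests]
        if any(results.get(t) is False for t in tests):
            report[req.req_id] = "failing"
        elif any(t not in results for t in tests):
            report[req.req_id] = "not_run"
        else:
            report[req.req_id] = "passing"
    return report


# Hypothetical plan and results, for illustration only.
plan = [
    Requirement("REQ-1", [Feature("fifo_parity", ["t_parity_inject"])]),
    Requirement("REQ-2", [Feature("bus_ecc", [])]),
    Requirement("REQ-3", [Feature("reset", ["t_reset"])]),
    Requirement("REQ-4", [Feature("ecc_correct", ["t_ecc_single", "t_ecc_double"])]),
]
results = {"t_parity_inject": True, "t_ecc_single": True, "t_ecc_double": False}
report = trace_report(plan, results)
```

The point of the structure is that a requirement without a test, or a test without a result, shows up as a distinct status instead of being silently counted as covered.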

Security can be addressed at the level of external interfaces, internal hardware backdoors or software applications. Software application security, in the context of hardware support, is addressed by hardware firewalls that enforce a set of access policies for accessing devices. Verifying secure device access policies can require additional test cases and modifications to existing test cases. Internal hardware backdoors and external interface attacks require the help of formal verification to prove that such unauthorized access paths do not exist.
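For the access policy test cases, a small reference model of the firewall can predict whether a given access should pass. The sketch below assumes a default-deny firewall with per-master address windows. The `Policy` fields, master names and addresses are hypothetical, and a real firewall is likely to have more attributes, such as privilege level or secure state.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Policy:
    master: str        # bus master the rule applies to
    base: int          # first address of the protected window
    size: int          # window size in bytes
    allow_read: bool
    allow_write: bool


def is_allowed(policies: List[Policy], master: str, addr: int, is_write: bool) -> bool:
    """Default deny: an access passes only if a matching policy permits it."""
    for p in policies:
        in_window = p.base <= addr < p.base + p.size
        if p.master == master and in_window:
            if p.allow_write if is_write else p.allow_read:
                return True
    return False


# Hypothetical policy set: the secure CPU has full access, the DMA is read-only.
policies = [
    Policy("cpu_secure", 0x1000, 0x100, allow_read=True, allow_write=True),
    Policy("dma", 0x1000, 0x100, allow_read=True, allow_write=False),
]
```

A test can then issue each access to the design and compare the observed response (completed or blocked) against `is_allowed` for the same master, address and direction.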

Verification technologies

The verification technologies available to us are:

- Simulation

- Emulation

- FPGA prototyping

- Formal

The primary benefit of simulation-based verification is controllability and observability. One may ask: why not meet all my verification goals with simulation alone? Sure, if you have infinite time and resources. Given shorter time-to-market cycles, some level of parallelism in executing verification tasks is desirable. It may involve a certain level of redundancy and the additional cost that comes with it. The idea is to use the right tool for the problem at hand and reduce the time to catch showstopper bugs.

Simulation-based verification is hitting speed limits for current design sizes. Emulation in the form of testbench acceleration is gaining traction and wider adoption. Emulation might stay limited to certain types of designs because of the limited availability of bus functional models in hardware, also called transactors. This will improve as adoption grows. Transactors will also have limited functionality; they will not be able to completely match the simulation models. Not everything can be verified in emulation. For example, certain error-injection scenarios will still have to be verified in simulation.

Although emulation provides greater speed than simulation, it is still slower than FPGA prototyping. An FPGA prototype is application-specific, while emulation provides a generic platform. FPGA prototyping requires building FPGA-based boards, and debugging on an FPGA-based platform is another challenge. But it provides far higher clock speeds than emulation.

In both emulation and FPGA prototyping, the DUT is typically modified to make it fit, for example by reducing internal memory, reducing bus widths or disabling specialized features. This means it is still in simulation that the DUT remains in the form closest to its final usage.

There are certain classes of problems that are well suited to formal verification using assertions. They are described below:

- Certain features that require formally proving that, under all scenarios, certain conditions will or will not occur. Examples are deadlock or cache coherence problems

- Certain regular structures where simple combinations of functionality explode, such as interrupt generation passing through several enables and masks

- Computationally intensive logic that can be represented by mathematical expressions or algorithms
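The interrupt example in the list above can be made concrete with a small Python sketch. It compares a bitwise model of hypothetical interrupt logic against a per-source statement of intent for every input combination. Brute-force enumeration is not formal verification. It only works here because the width is tiny, and it is meant to show why the input space explodes and why a formal tool, which reasons about all inputs symbolically, suits this class of problem. The signal names and the interrupt structure (status, enable, mask and one global enable) are assumptions.

```python
from itertools import product


def irq_impl(status: int, enable: int, mask: int, global_en: int, width: int) -> bool:
    """Bitwise model of the hypothetical interrupt logic being checked."""
    live = status & enable & ~mask & ((1 << width) - 1)
    return bool(global_en) and live != 0


def irq_spec(status: int, enable: int, mask: int, global_en: int, width: int) -> bool:
    """Intent: interrupt fires if any source is pending, enabled and not masked."""
    if not global_en:
        return False
    for bit in range(width):
        pending = (status >> bit) & 1
        enabled = (enable >> bit) & 1
        masked = (mask >> bit) & 1
        if pending and enabled and not masked:
            return True
    return False


def exhaustive_check(width: int) -> int:
    """Compare model and intent for every input; return the number of cases checked."""
    checked = 0
    values = range(1 << width)
    for status, enable, mask, global_en in product(values, values, values, (0, 1)):
        assert irq_impl(status, enable, mask, global_en, width) == \
            irq_spec(status, enable, mask, global_en, width)
        checked += 1
    return checked


def input_space(width: int) -> int:
    """Number of input combinations: three width-bit vectors plus one global enable bit."""
    return 2 ** (3 * width + 1)
```

With three interrupt sources, `exhaustive_check(3)` covers 1024 combinations. With 32 sources, `input_space(32)` is 2**97, which is far beyond what directed or random simulation can enumerate.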

Verification abstractions

Verification abstractions are fundamentally designed around a divide and conquer approach:

- IP or Unit level verification

- Sub-system or cluster level verification

- SOC level verification

Even within IP or unit level verification, a “divide and conquer” approach may have to be employed to achieve the verification goals, depending on whether the design is being created for the first time or has been in production for some time. Divide and conquer at the unit or IP level in simulation manifests in the form of multiple testbenches.

If a complex IP is built from the ground up, creating multiple testbenches might be a good idea. Divide and conquer works out better here because it allows RTL to be verified as and when it starts pouring in. But as time progresses and sub-units stabilize, an effort will have to be made to consolidate the testbenches. Testbench maintenance costs more than testbench creation.

The risk is assessed differently for an IP that is on its maiden voyage versus one that has been there and done that. If the IP has been taped out and is functioning in a couple of chips, the risk is reduced. Reduced risk presents an opportunity to consolidate the testbenches for that IP. Consolidation does mean some cases might get eliminated. As the chip matures, its application usage becomes clearer, which allows the removal of imagined use cases that are no longer relevant.

Certain IPs also contain a microcontroller to handle programmability. These IPs have the additional challenge of covering the firmware. Now the question is: do we use the firmware for all the test cases? Doing so can reduce controllability and observability, apart from slowing down the simulations. This means the bulk of the tests will have to be managed by abstracting out the firmware. There are two possible approaches.

The first approach is to use simple test-snippet-based firmware instead of the production firmware. This firmware consists of simple programs that mimic part of the production firmware’s behavior to verify a particular scenario. Although this approach reduces dependency on the production firmware, simulation speed continues to be a challenge. Maintaining the test firmware snippets is additional effort. Debugging also remains complex because of the software and hardware co-simulation.

The second approach is to replace the microcontroller with a bus functional model, abstracting the firmware to the transaction-level behavior relevant to the hardware. This approach provides higher simulation speed and easier debugging than using either test firmware or production firmware. Additional effort has to be invested in building the microcontroller’s bus functional model and the transaction model of the firmware. This approach also provides the highest level of controllability and observability of the three options.
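The idea behind the second approach can be illustrated with a short Python sketch, even though a real bus functional model would normally be written in the testbench language. Everything here is an assumption made for illustration: `RegisterBlock` is a toy stand-in for the hardware, the register offsets are hypothetical, and `init_sequence` represents a firmware routine reduced to the bus transactions it would generate.

```python
from typing import Dict, List, Tuple


class RegisterBlock:
    """Toy stand-in for the hardware visible on the microcontroller's bus."""

    def __init__(self) -> None:
        self.regs: Dict[int, int] = {}

    def write(self, addr: int, data: int) -> None:
        self.regs[addr] = data & 0xFFFFFFFF   # model 32-bit registers

    def read(self, addr: int) -> int:
        return self.regs.get(addr, 0)         # unwritten registers read as 0


class BusFunctionalModel:
    """Drives bus transactions directly, in place of the microcontroller and firmware."""

    def __init__(self, target: RegisterBlock) -> None:
        self.target = target
        self.log: List[Tuple[str, int, int]] = []   # (kind, address, data) per transaction

    def write(self, addr: int, data: int) -> None:
        self.target.write(addr, data)
        self.log.append(("W", addr, data))

    def read(self, addr: int) -> int:
        data = self.target.read(addr)
        self.log.append(("R", addr, data))
        return data


CTRL, IRQ_EN = 0x00, 0x04   # hypothetical register offsets


def init_sequence(bfm: BusFunctionalModel) -> int:
    """Transaction-level view of what a firmware init routine would do."""
    bfm.write(IRQ_EN, 0x3)   # enable two interrupt sources
    bfm.write(CTRL, 0x1)     # start the block
    return bfm.read(CTRL)    # read back to confirm


hw = RegisterBlock()
bfm = BusFunctionalModel(hw)
ctrl_value = init_sequence(bfm)
```

Because the test owns every transaction, it can reorder, delay or corrupt them at will, and the transaction log gives a direct view of what was driven. This is where the gain in controllability and observability comes from.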

Using either the first or the second approach does not eliminate the need to run a few use case tests with the production firmware.

When the total number of IPs in the SOC increases, an additional level of abstraction, the sub-system or cluster, is introduced. This is an intermediate level between IP and SOC level verification. The idea here is to organize the SOC as groups of IPs rather than individual IPs hanging on a backbone bus. IPs can be grouped based on different criteria, which could be driven by the use cases of the SOC. Performance and power are the primary factors driving the grouping of IPs. Soon security and safety will also play a role. The cluster itself is treated as an IP and/or a small SOC, and similar principles are applied.

While complete coverage is the focus of unit or IP level verification, the focus at the cluster or SOC level shifts to connectivity and use-case-based verification. Connectivity verification can be significantly aided by semi-formal verification techniques. Hardware and software co-simulation is inevitable at the SOC level. That’s exactly why emulation and FPGA prototyping aid SOC verification significantly.
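At its simplest, a connectivity check compares the connections the SOC specification calls for against the connections actually present in the integrated design. The sketch below assumes both are available as sets of (source, destination) pairs. It is a plain table comparison, not a semi-formal technique, and the signal names are hypothetical.

```python
from typing import List, Set, Tuple

Connection = Tuple[str, str]   # (source pin, destination pin)


def check_connectivity(spec: Set[Connection],
                       design: Set[Connection]) -> Tuple[List[Connection], List[Connection]]:
    """Return (missing, unexpected) connections, each sorted for stable reporting."""
    missing = sorted(spec - design)        # specified but absent from the design
    unexpected = sorted(design - spec)     # present in the design but never specified
    return missing, unexpected


# Hypothetical interrupt wiring: the DMA interrupt lands on the wrong controller input.
spec = {("uart0.irq", "intc.src[3]"), ("dma.irq", "intc.src[5]")}
design = {("uart0.irq", "intc.src[3]"), ("dma.irq", "intc.src[6]")}
missing, unexpected = check_connectivity(spec, design)
```

A single miswired signal shows up twice, once as a missing connection and once as an unexpected one, which makes this kind of integration bug easy to spot.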

Conclusion

A verification strategy has to take into account verification requirements, verification technologies and verification abstractions to figure out the optimal way to close on the verification objectives. It’s increasingly important to put a verification strategy in place and adapt it based on execution.