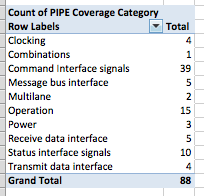

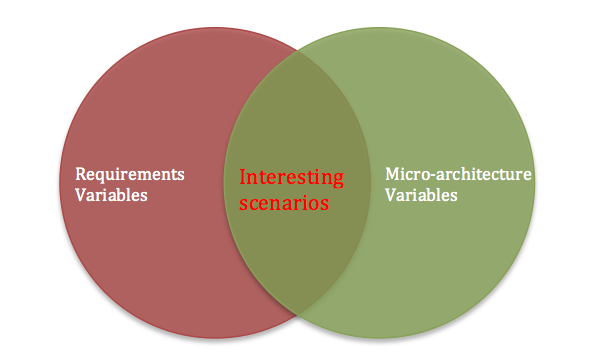

To be effective, a functional coverage plan has to take two specifications into consideration:

- Requirements specifications

- Micro-architecture implementation specifications

In principle, verification teams readily agree with this. But when it comes to defining the coverage plan, that agreement is not reflected.

In general, functional coverage itself receives less attention than it deserves. On top of that, of the two specifications above, requirements coverage ends up getting the lion’s share. Micro-architecture implementation coverage gets very little attention or is almost ignored.

To some, this may look like an issue out of nowhere. They may argue that as long as the requirements specification is covered through functional coverage, micro-architecture coverage will be taken care of by code coverage.

Why do we need functional coverage for micro-architecture?

We need functional coverage for micro-architecture specifications as well because interesting things happen at the intersection of requirements specification variables and micro-architecture implementation variables.

Many of the tough bugs hide at this intersection and, because of the thinking above, are caught very late in the verification flow or, worse, in silicon.

How? Let’s look at some examples.

Example#1

For a design with a pipeline, the combinations of states across the pipeline stages are an important coverage metric for the quality of the stimulus. Interface-level coverage of all input types alone cannot tell you whether all the interesting state combinations of the pipeline have been exercised.
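To make the idea concrete, here is a minimal Python sketch of a cross-coverage model over pipeline stage states. The three-stage pipeline and the state names `IDLE`, `BUSY` and `STALL` are assumptions made up for illustration; a real model would take them from the micro-architecture specification and would typically live in the test bench rather than in Python.

```python
from itertools import product

# Hypothetical stage states and pipeline depth, assumed for illustration only.
STAGE_STATES = ("IDLE", "BUSY", "STALL")
NUM_STAGES = 3


class PipelineCrossCoverage:
    """Tracks which combinations of per-stage states have been observed."""

    def __init__(self, num_stages=NUM_STAGES, states=STAGE_STATES):
        # One bin per combination of states across all stages.
        self.hits = {combo: 0 for combo in product(states, repeat=num_stages)}

    def sample(self, stage_states):
        """Record one per-cycle snapshot, e.g. ("BUSY", "STALL", "IDLE")."""
        combo = tuple(stage_states)
        if combo not in self.hits:
            raise ValueError(f"unknown state combination: {combo}")
        self.hits[combo] += 1

    def holes(self):
        """Return the state combinations that were never observed."""
        return [combo for combo, count in self.hits.items() if count == 0]

    def percent(self):
        """Return the percentage of combinations observed at least once."""
        covered = sum(1 for count in self.hits.values() if count > 0)
        return 100.0 * covered / len(self.hits)


cov = PipelineCrossCoverage()
cov.sample(("IDLE", "IDLE", "IDLE"))
cov.sample(("BUSY", "STALL", "IDLE"))
```

The list returned by `holes()` is the useful part: it names the stage-state combinations the stimulus has not yet produced, which interface-level coverage cannot show.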

Example#2

For a design with a series of FIFOs in the data path, back pressure occurring at different points, and in different combinations, is interesting to cover. Don’t wait for stimulus delays to uncover it.
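One possible way to track this is to record, on every cycle, which FIFOs are applying back pressure at the same time. The sketch below assumes three FIFOs with the made-up names `rx`, `core` and `tx`, and assumes the test bench can observe a per-FIFO "full" flag; both are illustrative assumptions.

```python
from itertools import product


class BackPressureCoverage:
    """Tracks which combinations of simultaneously full FIFOs were observed."""

    def __init__(self, fifo_names):
        self.fifo_names = tuple(fifo_names)
        self.seen = set()

    def sample(self, full_flags):
        """full_flags maps FIFO name -> True if it back-pressures this cycle."""
        pattern = tuple(bool(full_flags[name]) for name in self.fifo_names)
        self.seen.add(pattern)

    def holes(self):
        """Return the full/not-full patterns that were never observed."""
        all_patterns = product((False, True), repeat=len(self.fifo_names))
        return [p for p in all_patterns if p not in self.seen]


# Hypothetical FIFO names, assumed for illustration only.
bp_cov = BackPressureCoverage(["rx", "core", "tx"])
bp_cov.sample({"rx": False, "core": False, "tx": False})
bp_cov.sample({"rx": True, "core": False, "tx": True})
```

With three FIFOs there are eight patterns, so after the two samples above `holes()` still reports six combinations that no test has exercised.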

Example#3

For a design implementing scatter-gather lists for a communication protocol, random packet sizes are important, but packet sizes that collide with the internal buffer allocation sizes are even more so.

For example, let’s say a standard communication protocol allows a maximum payload of up to 1 KB. If the design internally manages buffers in multiples of 256 bytes, then multiple packets of size less than or equal to 256 bytes, or of sizes that are multiples of 256 bytes, are especially interesting to this implementation.

Note that from the protocol’s point of view this scenario is of the same importance as any random combination of sizes. If the design changes its buffer management to 512 bytes, the interesting packet size combinations change again. One can argue that constrained random will hit it. Sure, it may, but its probabilistic nature means it can miss as well. If it misses, it’s an expensive miss.

Covering these cases, and tuning constraints a bit based on the internal micro-architecture, can go a long way in helping find issues faster. Note this does not mean other sizes should not be exercised; rather, pay attention to the sensitive sizes because there is a higher likelihood of hard issues hiding there.
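The dependence of the interesting sizes on the buffer granularity can be sketched as a small binning function. The bin names and the three-way split below are assumptions for illustration; the 1 KB maximum payload and the 256-byte and 512-byte buffer sizes come from the example above.

```python
from collections import Counter


def classify_packet_size(size, buffer_bytes=256, max_payload=1024):
    """Bin a packet size relative to the internal buffer granularity."""
    if not 1 <= size <= max_payload:
        raise ValueError(f"size {size} is outside 1..{max_payload}")
    if size % buffer_bytes == 0:
        return "exact_multiple"  # fills whole buffers exactly
    if size < buffer_bytes:
        return "sub_buffer"      # fits within a single buffer
    return "other"               # spans buffers without aligning


def size_coverage(sizes, buffer_bytes=256):
    """Count how many observed packets fell into each bin."""
    return Counter(classify_packet_size(s, buffer_bytes) for s in sizes)


bins_256 = size_coverage([64, 256, 300, 1024], buffer_bytes=256)
bins_512 = size_coverage([64, 256, 300, 1024], buffer_bytes=512)
```

The same four packet sizes land in different bins once `buffer_bytes` changes from 256 to 512, which is why a coverage model written only from the protocol specification cannot capture them.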

This interesting intersection is a mine of bugs, but it is often ignored in the functional coverage plan due to the following three myths.

Myth#1: Verification engineers should not look into design

There is a big taboo that verification engineers should not look into the design. The idea behind this age-old myth is to prevent verification engineers from limiting the scope of verification or getting biased by the design.

This forces verification engineers to focus only on the requirements specification and ignore the micro-architecture details. But when tests fail, they look into the design anyway as part of the debug process.

Myth#2: Code coverage is sufficient

Many would say code coverage is sufficient for covering the micro-architecture specification. It does cover it, but only partially.

Remember that code coverage is limited because it has no notion of time, so concurrent occurrences and sequences are not addressed by it. If you agree that the examples above are interesting, then ask yourself whether code coverage addresses them.
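A small sketch can illustrate the difference. It compares "which states were visited", which is roughly what code coverage reports, with "which state followed which", which needs a notion of time. The state names and the trace are hypothetical, made up for illustration.

```python
from collections import Counter


def visited_states(trace):
    """Roughly what code coverage sees: which states were ever reached."""
    return set(trace)


def transition_coverage(trace):
    """Count (previous, current) state pairs in a per-cycle trace."""
    return Counter(zip(trace, trace[1:]))


# Hypothetical per-cycle state trace of an internal state machine.
trace = ["IDLE", "REQ", "WAIT", "REQ", "IDLE"]
states = visited_states(trace)
transitions = transition_coverage(trace)
```

Here every state is visited, so a visit-based metric looks complete, yet `transitions` shows that the sequence `WAIT` followed by `IDLE` never occurred.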

Myth#3: Assertion coverage can do the trick

Many designers add assertions into the design to check assumptions. Some may argue that covering these is sufficient to cover the micro-architecture details. But note that if the intent of an assertion is to check an assumption, then it’s not the same as functional coverage of the implementation.

For example, let’s say we have a simple request and acknowledgement interface between the internal blocks of a design, and the acknowledgement is expected within 100 clock cycles of request assertion. The designer may place an assertion capturing this requirement. Sure, the assertion will flag an error if the acknowledgement doesn’t show up within 100 clock cycles.

But does it cover the different timings of the acknowledgement coming in? No, it just means it has come within 100 cycles. It does not tell whether the acknowledgement came immediately after the request, in the 2-30, 31-60, 61-90 or 91-99 clock cycle range, or exactly at 100 clock cycles.
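The latency ranges mentioned above can be sketched as coverage bins. This is a minimal illustration that assumes latency is measured in whole clock cycles starting at 1, treats "immediately" as a latency of exactly one cycle, and places the 30/31 boundary so that the bins do not overlap; the bin names are made up.

```python
from collections import Counter

# (bin name, lowest latency, highest latency), all in clock cycles.
LATENCY_BINS = (
    ("immediate", 1, 1),
    ("2_to_30", 2, 30),
    ("31_to_60", 31, 60),
    ("61_to_90", 61, 90),
    ("91_to_99", 91, 99),
    ("exactly_100", 100, 100),
)


def latency_bin(cycles):
    """Map a request-to-acknowledgement latency to its coverage bin."""
    if cycles < 1:
        raise ValueError("latency must be at least one cycle")
    for name, low, high in LATENCY_BINS:
        if low <= cycles <= high:
            return name
    return "timeout"  # beyond 100 cycles: the case the assertion flags


def latency_holes(latencies):
    """Return the names of legal latency bins that were never hit."""
    hit = Counter(latency_bin(c) for c in latencies)
    return [name for name, _, _ in LATENCY_BINS if hit[name] == 0]


holes = latency_holes([3, 17, 45, 45, 62])
```

For the sample latencies above, the assertion would pass on every transaction, while `holes` shows that the immediate, 91-99 and exactly-100 cases were never exercised.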

However, there are always exceptions: a few designers do add explicit micro-architecture functional coverage for their RTL code. Even then, they restrict its scope to their own RTL sub-block. The holistic view of the complete micro-architecture is still missed.

Micro-architecture functional coverage: No man’s land

A simple question: who should take care of adding the functional coverage requirements of the micro-architecture to the coverage plan?

Verification engineers would argue that they lack understanding of the internals of the design. Design engineers lack understanding of functional coverage and the test bench.

All this leads to micro-architecture functional coverage falling into no man’s land. Due to the lack of clarity on who is responsible for implementing it, it’s often missed or implemented only to a very minimal extent.

This leads to hard-to-fix bugs that are only discovered in silicon, like the Pentium FDIV bug. Companies end up paying a heavy price. The risk of this could have been minimized significantly with the help of micro-architecture functional coverage.

Both verification and design engineers can generate it quickly using the library of built-in coverage models from our tool curiosity.