Constrained random verification is one of the popular approaches for verifying designs with complex state spaces. Although verification methodologies have received considerable attention, measuring the effectiveness of the results this approach delivers has received far less.

Functional coverage is one of the key metrics used for measuring effectiveness. This metric, and the way it is implemented, has several limitations:

- Functional coverage can tell you whether something is covered, but it cannot tell you whether the relative distribution of stimulus among features is aligned with your project priorities

- Black box, requirement-specification-driven coverage gets attention, but white box micro-architecture functional coverage is almost ignored or left to the mercy of designers

- For a coverage metric to stay effective, the intent of the coverage should be captured in executable form. This is possible when the coverage can be generated from executable models of the specification, which lets it evolve and adapt easily to specification changes. Current SystemVerilog covergroups lack the firepower to get this done

All these limitations directly affect the functional verification quality achieved. Lower verification quality translates to late discovery of critical bugs.

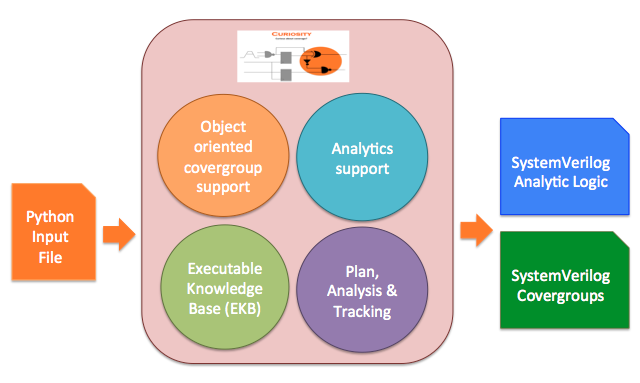

The Curiosity framework is designed to address these challenges and help improve the quality of verification. Its primary focus is whitebox functional coverage and statistical coverage generation, filling that major gap quickly by allowing input specification at a higher level of abstraction.

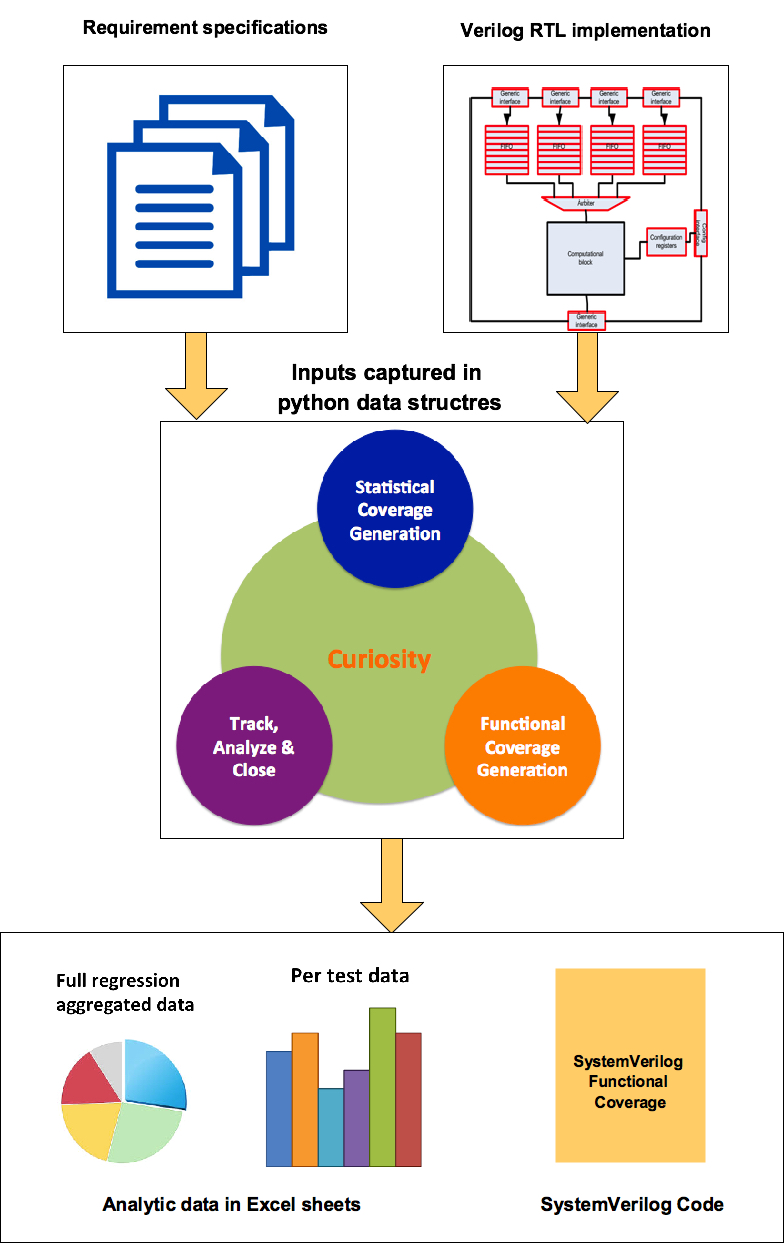

Figure: Curiosity framework flow

Statistical coverage

A functional coverage hit proves that the scenario or value being covered has occurred in simulation. Coverage goals can be defined as the number of times a particular covergroup needs to be hit. What you cannot find out from coverage reports, however, is the relative distribution of stimulus across the various features.

Statistical coverage helps you address this by providing the relative distribution of the stimulus across various features. This helps you align the stimulus to your project priorities. Aligning the stimulus to your project priorities increases the chances of hitting the bugs that matter.

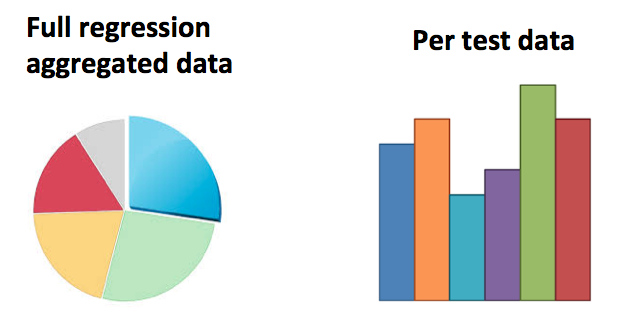

Figure: Illustrative statistical coverage data

For example, you can find out what percentage of the overall simulation time, across either a test or a regression, an FSM spends in each of its states. This can provide insight into the total time spent in initialisation, normal operation, low power operation, or particular modes and configurations. Also imagine being able to find out how long dynamic voltage and frequency scaling logic spends at each clock frequency.
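As a rough illustration of the idea (not the framework's own implementation), the percentage of time per FSM state can be derived from a log of state changes. The function name, the trace format and the state names below are all assumptions made for this sketch.

```python
from collections import defaultdict

def state_time_distribution(samples, end_time):
    """Return the percentage of observed time spent in each FSM state.

    samples: list of (time, state) pairs sorted by time, one per state change.
    end_time: simulation time at which observation stops.
    """
    durations = defaultdict(int)
    # Pair each state change with the next one (or end_time) to get its duration.
    for (start, state), (stop, _) in zip(samples, samples[1:] + [(end_time, None)]):
        durations[state] += stop - start
    total = end_time - samples[0][0]
    return {state: 100.0 * d / total for state, d in durations.items()}

# Hypothetical trace: times in ns, state names are illustrative only.
trace = [(0, "INIT"), (100, "NORMAL"), (700, "LOW_POWER"), (900, "NORMAL")]
dist = state_time_distribution(trace, end_time=1000)
print(dist)
```

Summing such per-test durations before converting to percentages would give the regression-level view.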

The framework also enables you to quickly find out many other types of statistics. Some of them are:

- Count of any type of event. For example, the number of times clock gating has taken place.

- Minimum and maximum duration of some signal. This can help you find out, for example:

  - Minimum and maximum interrupt service latency across a regression

  - Minimum and maximum duration of a specific timer timeout

- Some events taking place back to back

All these statistics are available per test or across a group of tests. This can bring a lot of transparency and clarity, especially when you are in the last mile of verification closure.
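The event count and minimum/maximum duration statistics listed above can be sketched in a few lines of Python. This is only an illustration under assumed inputs: the `(time, value)` trace format, the helper names and the sample interrupt trace are hypothetical and are not part of the framework.

```python
def pulse_durations(samples, end_time):
    """Return the durations for which a 1-bit signal stayed high.

    samples: list of (time, value) pairs sorted by time, one per value change.
    end_time: time at which observation stops.
    """
    durations = []
    rise = None
    for time, value in samples:
        if value == 1 and rise is None:
            rise = time                      # signal went high
        elif value == 0 and rise is not None:
            durations.append(time - rise)    # signal went low: close the pulse
            rise = None
    if rise is not None:                     # still high when observation ended
        durations.append(end_time - rise)
    return durations

def summarize(durations):
    """Event count plus minimum and maximum duration."""
    return {"count": len(durations),
            "min": min(durations, default=None),
            "max": max(durations, default=None)}

# Hypothetical trace of an interrupt-pending signal, times in ns.
irq_trace = [(10, 1), (25, 0), (40, 1), (48, 0), (90, 1), (130, 0)]
stats = summarize(pulse_durations(irq_trace, end_time=200))
print(stats)
```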

Download UART based case study.

Whitebox functional coverage

Whitebox functional coverage is often ignored, and it bites back hardest. It gets ignored because it falls on the boundary between the design engineer and the verification engineer.

Designers do write some whitebox coverage, but it is mostly written to cover their assumptions. It is not written with the intent of checking stimulus quality.

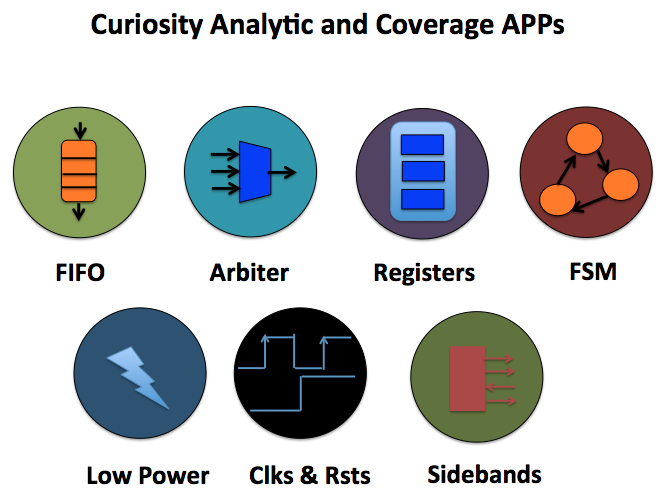

The whitebox functional coverage that the framework generates focuses on assessing stimulus quality, using standard micro-architecture elements (FIFOs, arbiters, etc.) as reference. They are used to figure out whether the stimulus is doing what matters to the design.

Figure: Some of APPs for micro-architecture coverage generation

For example, when we cover the count of FIFO EMPTY -> FIFO FULL cycling, the focus is not really on the FIFO. We know the FIFO is well qualified (is it?), but the logic surrounding the FIFO may not be. The idea of this coverage is to see whether the surrounding logic can deal with the FIFO going through these extremes.
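To make the EMPTY -> FULL cycling count concrete, here is a minimal sketch that counts such cycles in a sampled occupancy trace. It assumes the monitor samples the FIFO level once per clock; the function name and the trace are hypothetical.

```python
def count_empty_to_full(levels, depth):
    """Count how many times a FIFO went from empty to full.

    levels: FIFO occupancy sampled every clock.
    depth: FIFO capacity.
    Intermediate levels between the two extremes are allowed.
    """
    cycles = 0
    seen_empty = False
    for level in levels:
        if level == 0:
            seen_empty = True
        elif level == depth and seen_empty:
            cycles += 1
            seen_empty = False   # require a fresh EMPTY before counting again
    return cycles

# Hypothetical occupancy trace of a 4-deep FIFO.
occupancy = [0, 1, 2, 4, 3, 4, 2, 0, 0, 3, 4]
print(count_empty_to_full(occupancy, depth=4))
```

Note that the second FULL in the trace is not counted, because the FIFO did not drain to EMPTY in between.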

Another example: for a simple arbiter, we cover whether the first request came from each of the different requesters. Designers may not find this interesting from the point of view of arbiter operation. From a traffic generation point of view, however, it can provide interesting insights, such as whether the overall system can deal with traffic starting from different streams, and whether we have actually exercised that.
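The arbiter example can be sketched as a check across a regression: which requesters have never been the first to request? The encoding of requests as per-cycle bit vectors, the function names and the test names are assumptions made purely for illustration.

```python
def first_requesters(req_vectors, num_requesters):
    """Return the set of requesters active in the first non-idle cycle.

    req_vectors: per-cycle request bit vectors (bit i set = requester i requesting).
    """
    for vec in req_vectors:
        if vec:
            return {i for i in range(num_requesters) if (vec >> i) & 1}
    return set()

def uncovered_first_requesters(tests, num_requesters):
    """Requesters that never issued the first request in any test."""
    seen = set()
    for req_vectors in tests.values():
        seen |= first_requesters(req_vectors, num_requesters)
    return set(range(num_requesters)) - seen

# Hypothetical regression with three tests on a 4-requester arbiter.
regression = {
    "test_a": [0b0000, 0b0001, 0b0011],
    "test_b": [0b0100, 0b0101],
    "test_c": [0b0000, 0b0001],
}
missing = uncovered_first_requesters(regression, num_requesters=4)
print(sorted(missing))
```

Here traffic never started from requesters 1 or 3, which is exactly the kind of stimulus gap this coverage is meant to expose.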

Download UART based case study.

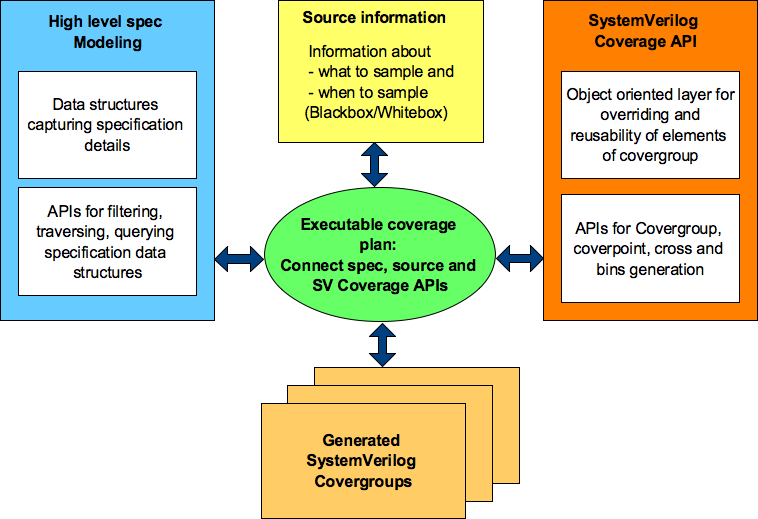

Specification to Functional coverage

Capture the specification in the form of data structures. Define a set of APIs to filter, transform, query and traverse those data structures. Combine these executable specifications with our Python APIs for SystemVerilog functional coverage generation. With that, the first step towards specification to functional coverage generation is ready.
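The flow described above might look roughly like the sketch below. It does not use the framework's actual APIs: the `SPEC` table, the `query` and `covergroup_sv` helpers, the message names and the `clk` and `msg_type` signal names are all invented for illustration and are not taken from any real specification.

```python
# Hypothetical specification model: message types of some protocol.
SPEC = [
    {"name": "PING",  "kind": "control", "code": 1},
    {"name": "ACK",   "kind": "control", "code": 3},
    {"name": "WRITE", "kind": "data",    "code": 2},
]

def query(spec, **criteria):
    """Filter specification entries on exact field matches."""
    return [e for e in spec if all(e[k] == v for k, v in criteria.items())]

def covergroup_sv(name, signal, entries):
    """Emit SystemVerilog covergroup text with one bin per specification entry."""
    lines = [f"covergroup {name} @(posedge clk);",
             f"  coverpoint {signal} {{"]
    lines += [f"    bins {e['name']} = {{{e['code']}}};" for e in entries]
    lines += ["  }", "endgroup"]
    return "\n".join(lines)

sv = covergroup_sv("cg_control_msgs", "msg_type", query(SPEC, kind="control"))
print(sv)
```

Because the bins are derived from the specification table, adding or renaming a message in `SPEC` changes the generated covergroup without any hand editing.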

Figure: Block diagram of high-level specification model based functional coverage generation

Using these high-level models you can start generating both functional and statistical coverage. One of the biggest benefits of this approach, apart from accelerating the coverage writing process, is capturing the intent in executable form.

Download USB power delivery protocol layer coverage case study.

Python libraries

The SystemVerilog functional covergroup construct has some limitations that prevent its effective reuse. Some of the key limitations are:

- The covergroup construct is not completely object oriented. It does not support inheritance, which means you cannot write a covergroup in a base class and add to, update or modify its behavior through a derived class. This feature is very important when you want to share common functional coverage models, and the coverage knowledge they carry, across multiple configurations of a DUT verified in different test benches

- Without the right bin definitions, coverpoints are not of much use. The bins of a coverpoint cannot be reused across multiple coverpoints, either within the same covergroup or in a different covergroup

- Key configurations are defined as crosses. In some cases you would like to see different scenarios taking place in all key configurations, but there is no clean way to reuse crosses across covergroups

- For a transition bin of a coverpoint to be hit, the defined sequence must complete on successive sampling events. There is no [!:$] type of support where the transition may occur at any later point. This makes transition bins difficult to implement for relaxed sequences
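The difference between strict and relaxed transition matching in the last point can be illustrated in plain Python. This is one possible reading of "relaxed" (the values must appear in order, with any number of other samples in between). It is a sketch and not how the framework implements it, and the state names are made up.

```python
def strict_hit(samples, sequence):
    """True if `sequence` occurs on successive sampling events."""
    n = len(sequence)
    return any(samples[i:i + n] == sequence
               for i in range(len(samples) - n + 1))

def relaxed_hit(samples, sequence):
    """True if the values of `sequence` occur in order, with any gaps allowed."""
    it = iter(samples)
    # `value in it` advances the iterator until the value is found,
    # so each later value is searched only in the remaining samples.
    return all(value in it for value in sequence)

# Hypothetical sampled values of a coverpoint.
samples = ["IDLE", "REQ", "WAIT", "WAIT", "GRANT"]
sequence = ["REQ", "GRANT"]
print(strict_hit(samples, sequence), relaxed_hit(samples, sequence))
```

A transition bin written as REQ => GRANT behaves like `strict_hit` and misses this trace because of the intervening WAIT samples.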

At VerifSudha, we have implemented a Python layer that makes the SystemVerilog covergroup construct object oriented and addresses all of the above limitations, making the coverage writing process more productive. The power of the Python language itself also opens up a lot more configurability and programmability.
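To give a feel for what an object-oriented layer over covergroups can offer, here is a minimal sketch with inheritance and reusable bins. The `Bins` and `Covergroup` classes, their methods and the signal names are hypothetical and written only for this example. They are not the VerifSudha API.

```python
class Bins:
    """A named, reusable set of bins (hypothetical helper)."""
    def __init__(self, **bins):
        self.bins = bins  # bin name -> SystemVerilog value or range text

    def sv(self, indent="    "):
        return [f"{indent}bins {n} = {{{v}}};" for n, v in self.bins.items()]

class Covergroup:
    """Base class that emits SystemVerilog covergroup text."""
    name = "cg_base"
    event = "@(posedge clk)"

    def coverpoints(self):
        """Return {coverpoint expression: Bins}; derived classes extend this."""
        return {}

    def sv(self):
        lines = [f"covergroup {self.name} {self.event};"]
        for expr, bins in self.coverpoints().items():
            lines.append(f"  coverpoint {expr} {{")
            lines += bins.sv()
            lines.append("  }")
        lines.append("endgroup")
        return "\n".join(lines)

# One bins definition, shared by every coverpoint that needs it.
LENGTHS = Bins(len_min="1", len_mid="[2:62]", len_max="63")

class PacketCoverage(Covergroup):
    name = "cg_packet"
    def coverpoints(self):
        return {"tx_len": LENGTHS}

class DuplexPacketCoverage(PacketCoverage):
    name = "cg_packet_duplex"
    def coverpoints(self):
        points = super().coverpoints()   # inherit the base coverpoints
        points["rx_len"] = LENGTHS       # reuse the same bins on a new coverpoint
        return points

print(DuplexPacketCoverage().sv())
```

The derived class adds a coverpoint without copying anything from the base, and both coverpoints share one bins definition.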

Reuse is the way you can store your verification knowledge in the executable form by encoding it as configurable high-level coverage models.

On this reusable coverage foundation we have built and bundled many reusable high-level coverage models, which raise the level of abstraction and make coverage writing easier and faster. The great part is that you can build a library of high-level coverage models based on the best-known verification practices of your organisation.

These APIs allow highly programmable and configurable SystemVerilog functional coverage code generation.

Fundamental idea behind all these APIs is very simple.

Figure: SV Coverage API layering

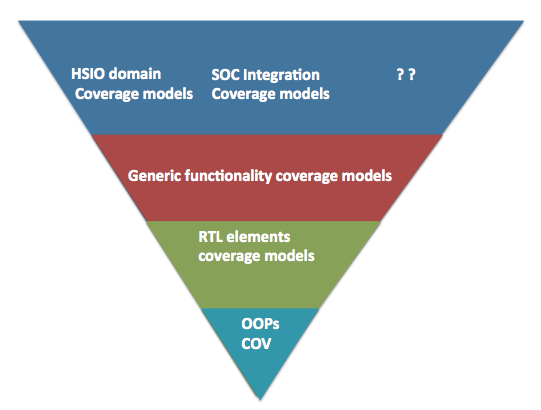

We have implemented these APIs as multiple layers in Python.

The bottom-most layer consists of basic Python wrappers through which you can generate functional coverage, along with support for object orientation. This provides the foundation for building reusable and customisable high-level functional coverage models. A description of the other layers follows.

RTL elements coverage models cover various standard RTL logic elements, from simple expressions, CDC and interrupts to APPs for standard RTL elements such as FIFOs, arbiters, register interfaces, low power logic, clocks and sideband signals.

Generic functionality coverage models are structured around some of the standard high-level logic structures. For example: did an interrupt trigger while it was masked, for every possible interrupt before aggregation? Sometimes this type of coverage is not evident from code coverage. Some of these models are also based on the typical bugs found in different standard logic structures.
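As an illustration of such a generic model, the masked-interrupt question can be expanded into one coverpoint per interrupt source. The generator below is a sketch only. It assumes a raw interrupt vector and a mask vector in which a set bit means "masked". The function name and signal names are hypothetical.

```python
def masked_interrupt_covergroup(name, raw, mask, num_irqs):
    """Emit a covergroup checking that each interrupt fired while masked.

    raw:  name of the raw (pre-aggregation) interrupt vector.
    mask: name of the mask vector; a set bit is ASSUMED to mean "masked".
    """
    lines = [f"covergroup {name} @(posedge clk);"]
    for i in range(num_irqs):
        lines.append(f"  cp_irq{i}_masked: coverpoint ({raw}[{i}] && {mask}[{i}]) {{")
        lines.append("    bins triggered_while_masked = {1};")
        lines.append("  }")
    lines.append("endgroup")
    return "\n".join(lines)

sv = masked_interrupt_covergroup("cg_irq_masked", "irq_raw", "irq_mask", num_irqs=4)
print(sv)
```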

At the highest level are domain-specific coverage models. For example, many high-speed serial IOs solve some common problems, especially at the physical and link layers. These coverage models attempt to model those common features.

All these coverage models are easy to extend and customise because they are built on the object oriented paradigm. That is what makes them useful: if they were not easy to extend and customise, they would be almost useless.

Input and Output

The Curiosity framework takes input in the form of Python code and generates output in the form of SystemVerilog code.

Figure: Curiosity framework Input and Output

The full power of Python can be used by combining it with the framework's set of pre-defined Python objects and APIs for generating functional coverage, statistical coverage and the required monitors.

The generated code needs to be compiled with the test bench. Generated functional coverage works like any other user-written functional coverage, and its results appear in the regular functional coverage reports generated by the simulator.

Statistical coverage reports, however, are generated by the framework itself. The compiled code has built-in functionality to dump an additional coverage database for statistical coverage. This statistical coverage database is separate from the simulator's standard functional coverage database.

Much as the simulator does for functional coverage, the framework aggregates the statistical coverage from every test at the end of the regression using this database. Results are summarised in Excel format at both the per-test and full-regression level.
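The aggregation step can be pictured as a merge of per-test records into regression-level records. The sketch below assumes each test contributes a count, minimum and maximum per metric. The record layout, metric names and test names are hypothetical, and the framework's real database format is not shown here.

```python
def merge_stats(per_test):
    """Merge per-test statistics into one regression-level record per metric."""
    merged = {}
    for stats in per_test.values():
        for metric, s in stats.items():
            # The first occurrence of a metric seeds min/max; count starts at zero.
            m = merged.setdefault(metric, {"count": 0, "min": s["min"], "max": s["max"]})
            m["count"] += s["count"]
            m["min"] = min(m["min"], s["min"])
            m["max"] = max(m["max"], s["max"])
    return merged

# Hypothetical per-test statistical coverage records.
per_test = {
    "test_smoke":  {"irq_latency_ns": {"count": 4, "min": 12, "max": 40}},
    "test_stress": {"irq_latency_ns": {"count": 9, "min": 8, "max": 95},
                    "clk_gate_ns":    {"count": 2, "min": 100, "max": 300}},
}
regression = merge_stats(per_test)
print(regression)
```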

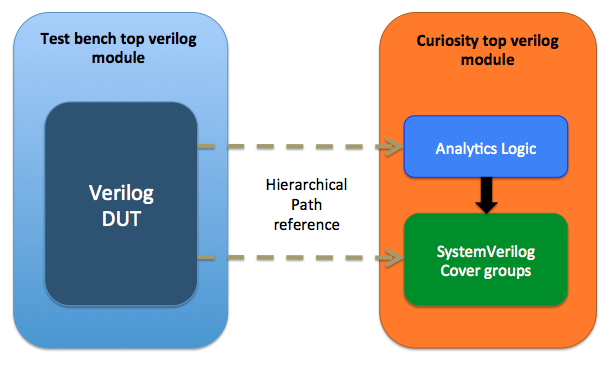

Integration of Whitebox coverage

The generated whitebox code, including both monitors and coverage, can be integrated without touching the RTL or the test bench. It relies on hierarchical path references into the design to snoop information directly. Hierarchical paths are quarantined and managed to ensure that the impact of any hierarchy change is minimal.

Figure: Integrating the curiosity generated code in test bench

A SystemVerilog module is generated that acts as a container for all the monitors and coverage. This module is compiled at the same hierarchy level as your test bench top and simulated along with it. All you need to do is include this additional module in your compile.
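One way to picture the quarantining of hierarchical paths is a single path table that the generator substitutes into the container module. This is a sketch under assumptions, not the framework's actual mechanism: the `PATHS` table, the `container_module` helper, the module name and every hierarchical path are invented for illustration.

```python
# Single table of hierarchical paths: the only place to edit when the
# design hierarchy changes. All paths here are hypothetical.
PATHS = {
    "clk": "tb_top.dut.clk",
    "fifo_level": "tb_top.dut.u_rx.u_fifo.level",
}

# Body template refers to signals by alias only. Because str.format is used,
# any literal SystemVerilog braces in a template would need to be doubled.
BODY = """  covergroup cg_fifo @(posedge {clk});
    coverpoint {fifo_level};
  endgroup
  cg_fifo cg_fifo_i = new();"""

def container_module(name, paths, body_template):
    """Emit a SystemVerilog container module with aliases resolved to paths."""
    body = body_template.format(**paths)
    return f"module {name};\n{body}\nendmodule"

sv = container_module("curiosity_wb", PATHS, BODY)
print(sv)
```

With this arrangement a hierarchy change touches only the path table, and the coverage and monitor templates stay untouched.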