What? We don’t even have any functional coverage and you want us to write functional coverage for bug fixes?

Ah! That’s a nice try, but sorry, we don’t have time for that. Maybe on the next project, when we have some extra cycles, we will certainly revisit it.

We are very close to meeting our code coverage targets. So I guess we are good here. We don’t have time for wishy-washy functional coverage.

Fair enough. We hope you are aware of the limitations of code coverage.

Oh, yeah, we are aware of those. Anything new?

Let’s see.

How about bugs? Are you finding any new bugs even after almost closing code coverage goals?

An awkward pause for a few moments, some throat clearing. Yes, we do.

Well, we can consider the design the gold standard until we discover bugs in it. We can all assume code coverage is good enough and RTL quality is great. But the moment we discover a bug even after code coverage closure, that assumption breaks down. We can no longer hide behind it.

There is never a single cockroach

Let’s just presume the emulation or SoC verification team reports a bug that ideally should have been caught in unit verification. A bug found in a very specific configuration and a specific scenario. Should the unit verification team just recreate that scenario in that specific configuration and say it’s done?

We can, but first I would like to quote my boss from the early days of my career. Every time we reported that we had discovered and fixed a bug, he would say, “there is never a single cockroach”. Just think back: have you ever seen only one? There are always more if you look around. What does this mean in this context?

Think about it. We can consider RTL with 100% code coverage innocent until a bug is discovered in it. After the bug is discovered, it’s guilty and has to face trial. A thorough investigation must be performed.

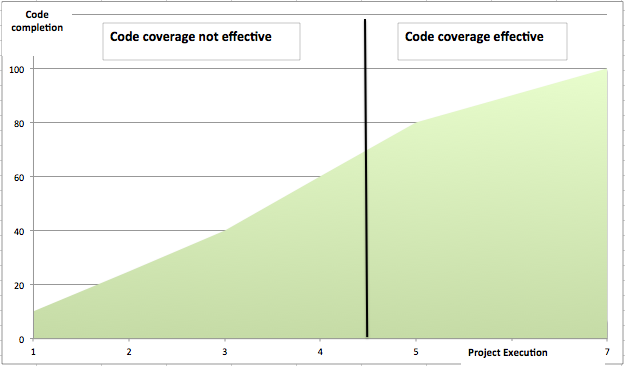

Even if you did not create a comprehensive functional coverage plan as part of initial verification planning, now is the time to rethink it. No, there is no need to rush to create a comprehensive one now. That’s not going to help much. Typically, resources and time at this point are best spent on verifying. We don’t want the team to go on a systematic hunt for new weak areas with a comprehensive functional coverage plan.

Bugs are already hinting at where the design is weak. Now the question is: how do we use them to reduce further risk?

How to verify bug fixes?

Verifying bug fixes is very similar to filling potholes.

Filling a pothole is not effective if you just pour asphalt right into it and move on. It’s not going to hold up. The right way to do it is to first cut out the loose areas around the pothole. Clear any debris inside the pothole. Now pour the asphalt. Roll it nice and clean. If you do this, there is a much greater chance that it will hold.

Similarly, while verifying bug fixes, consider widening and deepening the verification scope a bit. Remember, there could be more bugs hiding around or behind the current bug. A commonly quoted statistic says that one in every five bug fixes introduces a new bug.

Functional coverage is important now, as this bug has escaped the radar of code coverage. I hope you are now convinced. Even if you have avoided functional coverage so far, now is the time to bring it into play. Set a clear scope for the bug verification using functional coverage around the area where the bug was discovered. Remember, the cost of a bug increases the later it is found.
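To make “scope around the bug” a little more concrete, here is a minimal sketch in Python of a cross-coverage model. Everything in it is an assumption made up for illustration: the bug is imagined to have been reported with a `burst` mode and a `full` FIFO, and the bin names, the `CrossCoverage` class and its methods are hypothetical. In a real testbench this would more likely be a covergroup in your verification language. The idea is simply to cover the neighbourhood of the bug, not just the one point that was reported.

```python
from itertools import product

# Hypothetical example: assume the bug was reported with mode="burst"
# and a full FIFO. Cover its neighbourhood, not just that one point.
MODES = ["single", "burst", "wrap"]          # assumed configuration values
FIFO_LEVELS = ["empty", "partial", "full"]   # assumed scenario bins


class CrossCoverage:
    """Minimal cross-coverage model: one bin per (mode, fifo_level) pair."""

    def __init__(self, modes, levels):
        self.hits = {pair: 0 for pair in product(modes, levels)}

    def sample(self, mode, level):
        """Record one observation; values outside the declared bins are rejected."""
        key = (mode, level)
        if key not in self.hits:
            raise ValueError(f"unexpected bin: {key}")
        self.hits[key] += 1

    def holes(self):
        """Return the bins that have never been hit, in sorted order."""
        return sorted(pair for pair, count in self.hits.items() if count == 0)

    def percent(self):
        """Return the percentage of bins hit at least once."""
        covered = sum(1 for count in self.hits.values() if count > 0)
        return 100.0 * covered / len(self.hits)


cov = CrossCoverage(MODES, FIFO_LEVELS)
cov.sample("burst", "full")      # the recreated bug scenario
cov.sample("burst", "partial")   # a neighbouring scenario
cov.sample("single", "full")     # a neighbouring configuration
print(f"coverage: {cov.percent():.1f}%")
print("holes:", cov.holes())
```

Recreating only the reported scenario hits one bin out of nine. The list of holes is the cut-out area around the pothole: the combinations next to the bug that nobody has exercised yet.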

Sounds good. But we are really resource and time constrained. That isn’t going away even if we agree.

If you really want to do it right, then our tool curiosity can help. It can help you generate whitebox functional coverage 3x-5x faster. The generated code requires no integration and compiles out of the box. So you get to coverage results faster. Faster results provide more time for verification. More time for verification of bugs results in better RTL quality.

What do you say?