We have all heard of technical debt, but what is functional coverage debt? It is along the same lines as technical debt, but specific to functional coverage. Functional coverage debt is the absence, or insufficiency, of functional coverage that has accumulated over the years for silicon-proven IPs.

Can we have silicon-proven IPs without functional coverage? Yes, of course. Designs have been taped out successfully without coverage-driven constrained random verification as well. Many implementations that are decades old and still going strong were born and brought up in older test benches.

These were plain Verilog or VHDL test benches. Let’s not underestimate their power. It is not always about the sword but also about who is holding it.

Some of these legacy test benches may have migrated to the latest verification methodologies and high-level verification languages (HVLs). Sometimes these migrations do not exploit the full power the HVLs offer, and functional coverage may be one of the capabilities left unused.

Now we have legacy IPs that have been around for some time and have been taped out successfully multiple times. They are now mostly undergoing bug fixes and minor feature updates. Let’s call these silicon-proven mature IPs.

For silicon-proven mature IPs, is there value in investing in full functional coverage?

This is a tough question, and the answer will vary case by case. Silicon-proven IPs are special because they have real field experience. They have faced real-life scenarios. Bugs in these IPs have been found over the years the hard way, through silicon failures and long debugs. They have paid a high price for their maturity and have learnt from their own mistakes.

The bottom line is that they have now become mature. Whether or not proper test cases were written for the bugs after they were discovered, these IPs are now proven and capable of handling various real-life scenarios.

So for such mature IPs, when a complete functional coverage plan is written and then implemented, the results can be a bit of a surprise. Initially there can be many visible coverage holes in the verification environment. This is because such IPs may have relied on silicon validation and on a very specific set of directed test scenarios written to reproduce and verify each issue in the test bench.

Even when many of these coverage holes are filled by enhancing the test bench or tests, it may not translate into finding any real bugs. Many of the hard-to-hit bugs have already been found the hard way, in silicon.

Now the important question: what is the point of investing time and resources in implementing functional coverage for these IPs?

It can only be justified as paying back technical debt, or in this case, functional coverage debt.

Does every silicon-proven mature IP need to pay back its functional coverage debt?

It depends. If the IP is going to stay as is without much change, then paying the debt can be pushed out further. It is better to time it with a discontinuity, such as a major specification update or feature update.

When major feature upgrades take place, the older logic will interact with the newer logic. This opens up the possibility of new issues in the older logic, in the new logic, and in the cross interaction between them.

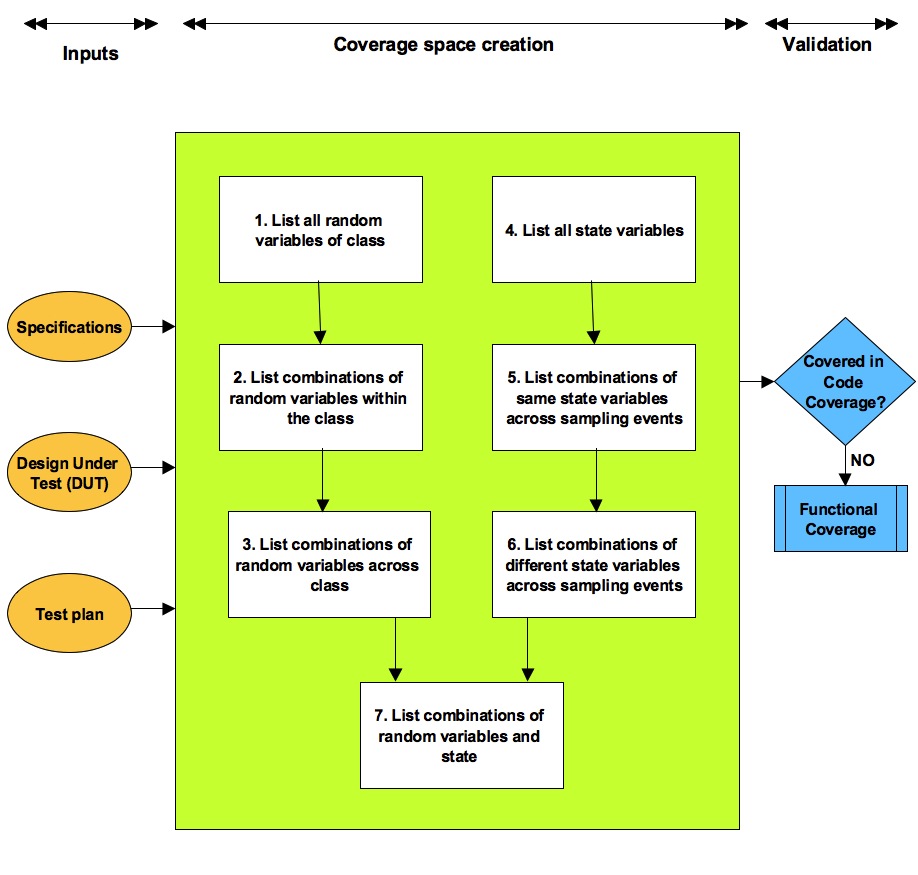

So why not just write functional coverage for the new features?

When there is no functional coverage at all, there is a lack of clarity on what has been verified and to what extent. This is like lacking GPS coordinates for our current position: without clearly knowing where we are, it is very difficult to reach our destination. When coverage is added in a patchy way, a heavy price may have to be paid, depending on the complexity of the change. For cross-feature interaction coverage, functional coverage of the existing features is also required.
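The dependency of cross coverage on existing-feature coverage can be illustrated with a minimal Python sketch, modeled loosely on a SystemVerilog covergroup cross. The bin names, including the new feature's `burst_on`/`burst_off` setting, are hypothetical and chosen only for illustration; this is not any particular tool's API. The point it shows is that the cross bins are enumerated from the existing feature's bins, so they cannot be defined until that base layer exists.

```python
from itertools import product

# Hypothetical bins: an existing feature (FIFO occupancy) and a new feature
# (an assumed burst mode added in the major update).
EXISTING_FEATURE_BINS = ("empty", "partial", "full")
NEW_FEATURE_BINS = ("burst_off", "burst_on")


class CrossCoverage:
    """Counts hits on every (existing bin, new bin) pair, like a covergroup cross."""

    def __init__(self, existing_bins, new_bins):
        # The cross is enumerated from the existing feature's bins; without
        # that base layer there is nothing to cross the new feature against.
        self.hits = {pair: 0 for pair in product(existing_bins, new_bins)}

    def sample(self, existing_bin, new_bin):
        key = (existing_bin, new_bin)
        if key not in self.hits:
            raise KeyError(f"unknown cross bin {key}")
        self.hits[key] += 1

    def holes(self):
        """Cross bins that were never hit, in enumeration order."""
        return [pair for pair, count in self.hits.items() if count == 0]


if __name__ == "__main__":
    cov = CrossCoverage(EXISTING_FEATURE_BINS, NEW_FEATURE_BINS)
    # New-feature tests that only ever run with a partly filled FIFO.
    cov.sample("partial", "burst_on")
    cov.sample("partial", "burst_off")
    print(len(cov.hits), cov.holes())
```

Here the new-feature tests exercise both burst settings, yet four of the six cross bins (every pairing with an empty or full FIFO) remain holes. Those holes are only visible because the existing feature's bins were defined first.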

Major updates are the right opportunity to pay the functional coverage debt and set things right: build a proper functional coverage plan and implement functional coverage from the ground up.

There will be larger, immediate benefits for the newer features. For older features, functional coverage will benefit future projects on this IP, as it will serve as a reference for the quality of the current test suite. Even when 100% functional coverage is not hit, there is clarity on what is being risked. Uncovered features become an informed risk rather than a gamble.

Conclusion

For silicon-proven IPs, the value of functional coverage is very low if the IP is not going to change any more. If what was taped out stays as is, there is no need to invest in functional coverage.

If the IP is going to change, the quality achieved is no longer guaranteed to be the same after the change:

- Functional coverage ensures that functional verification quality carries over even when the design changes

- Ex: A directed test that created a scenario for a certain FIFO depth may not create the same scenario when the FIFO depth is changed

- Unless code coverage happens to be sensitive to this aspect, it can be missed in the next iteration without functional coverage

- Also, for new feature updates it may be difficult to write functional coverage for cross-feature interactions unless there is a base layer of functional coverage for the existing features

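The FIFO depth example can be sketched in Python as a hypothetical coverage model whose occupancy bins are defined relative to the depth parameter, paired with a legacy directed test that hardcodes 8 pushes. The class, bin names and test are assumptions for illustration, not any particular tool's API. The same directed test closes every bin at depth 8 but silently leaves `almost_full` and `full` uncovered at depth 16, which is the kind of quality loss functional coverage would flag.

```python
from dataclasses import dataclass, field

# Hypothetical occupancy bins, in the order they are reported.
BINS = ("empty", "partial", "almost_full", "full")


@dataclass
class FifoOccupancyCoverage:
    """Sketch of a coverage model whose bins scale with the FIFO depth."""

    depth: int  # assumed >= 2 so "empty" and "almost_full" never overlap
    hits: dict = field(init=False)

    def __post_init__(self) -> None:
        if self.depth < 2:
            raise ValueError("depth must be at least 2")
        self.hits = {name: 0 for name in BINS}

    def _bin(self, occupancy: int) -> str:
        # Bins are defined relative to depth, not as hardcoded numbers.
        if occupancy == 0:
            return "empty"
        if occupancy == self.depth:
            return "full"
        if occupancy == self.depth - 1:
            return "almost_full"
        return "partial"

    def sample(self, occupancy: int) -> None:
        if not 0 <= occupancy <= self.depth:
            raise ValueError(f"occupancy {occupancy} outside 0..{self.depth}")
        self.hits[self._bin(occupancy)] += 1

    def holes(self) -> list:
        """Bins that were never hit."""
        return [name for name in BINS if self.hits[name] == 0]


def legacy_directed_test(cov: FifoOccupancyCoverage, pushes: int = 8) -> None:
    """Hypothetical directed test written when the FIFO was 8 deep:
    push a fixed number of entries, then drain, sampling occupancy each step."""
    occupancy = 0
    cov.sample(occupancy)
    for _ in range(pushes):
        occupancy = min(occupancy + 1, cov.depth)  # writes to a full FIFO are dropped
        cov.sample(occupancy)
    while occupancy > 0:
        occupancy -= 1
        cov.sample(occupancy)


if __name__ == "__main__":
    for depth in (8, 16):
        cov = FifoOccupancyCoverage(depth)
        legacy_directed_test(cov)
        print(depth, cov.holes())
    # 8 []
    # 16 ['almost_full', 'full']
```

Defining the bins in terms of `depth` is the design choice that matters here: the coverage model follows the parameter, while the directed test does not, so the gap shows up as explicit holes instead of going unnoticed.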

For silicon-proven IPs, implementing functional coverage is paying off “technical debt” in that project, but for new projects it:

- Provides the ability to hold on to the same quality

- Provides the base layer on which to build functional coverage for new features, so that its benefits are available from early on