The world of dynamic-simulation-based functional verification is not real. Verification architects abstract reality to create virtual worlds for the design under test (DUT) in simulation. Sometimes it reminds me of the architectures created in the movie Inception. It’s easy to lose track of what is real and what is not, and this leads to critical items being missed in the functional coverage plan. In this article we will look at three points of view from which to examine a feature during functional coverage planning, to increase the chances of discovering those critical scenarios.

Huh..! In the world of functional verification, how do I know what is real and what is not?

In simple words, if your design under test (DUT) is sensitive to something then it’s real, and if it’s not sensitive to it then it’s not real. The requirements specification generally describes the complete application. Your DUT may be playing one or a few of the roles in it. So it’s important to understand which aspects of the application world really matter to your design.

The virtual nature of verification makes it difficult to convey ideas. In such cases real-life analogies come in handy. They should not be stretched too far, but they can be used to make a focused point clear.

In this article, we want to talk about how to look at the requirements specification and micro-architecture details while writing a coverage plan. We want to emphasize that micro-architecture details are equally important for the functional coverage plan. Verification teams often ignore them, and verification quality suffers. Why do micro-architecture details matter to a verification engineer, and how much should the engineer know? Let’s understand this with an analogy.

Analogy

Let’s say a patient approaches an orthopedic surgeon for treatment of severe back pain. The orthopedic surgeon prescribes some strong painkillers. Is that the end of his duty? Most painkiller tablets take a toll on stomach and liver health. Some can even trigger gastric ulcers. What should the orthopedic surgeon do? Should he just remain silent? Should he refer the patient to a gastroenterologist? Should he include additional medications to take care of these side effects? And to do that, how far should the orthopedic surgeon get into the field of gastroenterology? Reflect on these questions based on real-life experience. If you are still young, go talk to some seniors.

The same dilemma and questions arise when doing functional coverage planning. Functional coverage planning cannot ignore the micro-architecture. Why?

Translating the requirements specification into a micro-architecture introduces side effects of its own. Some of these can be undesirable. If we choose to ignore them, we risk those undesirable side effects showing up as bugs.

Dilemma

Well, we can push it to the designers, saying that they are the ones responsible for it. That is true to some extent, but first-pass silicon dreams come true only when designers care for verification and verification cares for design. We are not saying verification engineers should be familiar with all the intricacies of the design, but a basic understanding of control and data flow cannot be escaped.

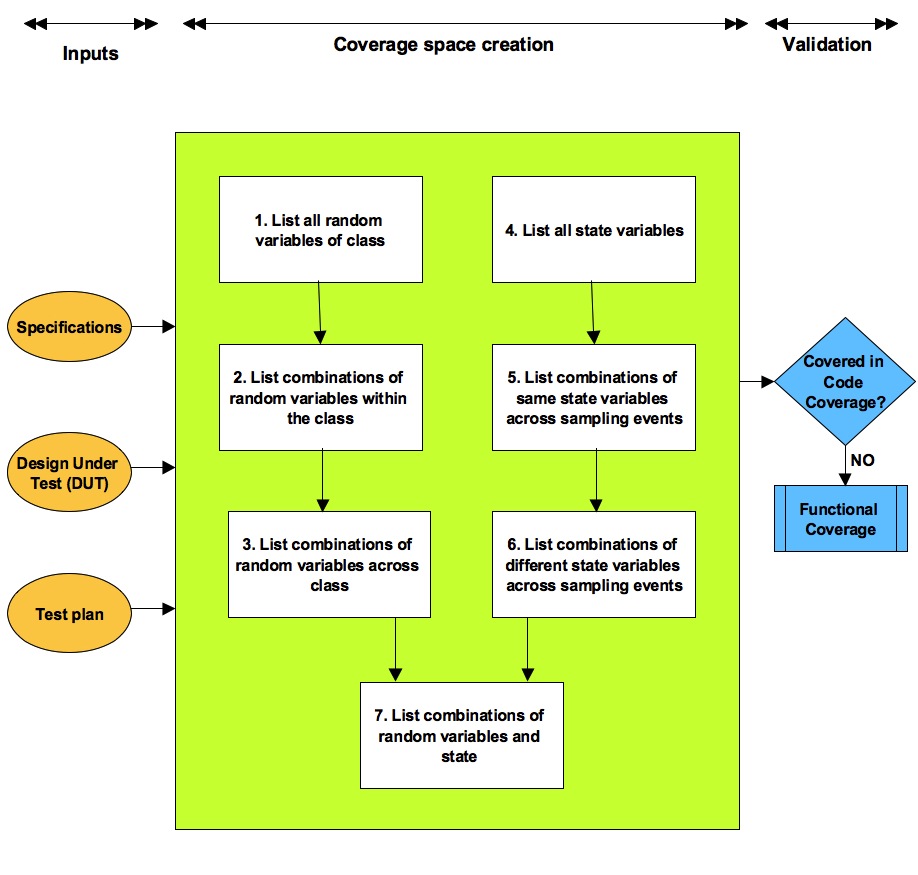

Functional coverage planning: 3 points of view

All the major features have to be thought through from three points of view while defining functional coverage. There can be a certain level of redundancy across them, but this redundancy is essential for quality.

Those three points of view are:

- Requirements specification point of view

- Micro-architecture point of view

- Intersection between requirements and micro-architecture point of view

For each of these, how deep to go depends on the sensitivity of the design to the stimulus being considered.

Let’s take an example to make this clearer.

Application of 3 points of view

Communication protocols often rely on timers to detect lost messages or unresponsive peers.

Let’s build a simple communication protocol. Let’s say it has three different types of request messages (M1, M2, M3) and a single acknowledgement (ACK) signaling successful reception. To keep it simple, the next request is not sent out until the previous one is acknowledged. A timer (ack_timer) is defined to take care of lost acknowledgements. The timer duration is programmable from 1 ms to 3 ms.

In the micro-architecture this is implemented with a simple counter that is started when any of the requests (M1, M2, M3) is sent to the peer and stopped when the acknowledgement (ACK) is received from the peer. If the acknowledgement is not received within the programmed duration, a timeout is signaled for action.
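To make the counter behavior concrete, here is a minimal behavioral sketch in Python. It is not the actual design: the class name `AckTimer`, its method names, and the idea of expressing the timeout in clock cycles are all assumptions made for illustration, since the article does not specify a clock frequency or an interface.

```python
class AckTimer:
    """Illustrative behavioral model of the ack_timer counter (hypothetical API)."""

    def __init__(self, timeout_cycles):
        # Programmed timeout expressed in clock cycles (assumed unit).
        self.timeout_cycles = timeout_cycles
        self.count = 0
        self.running = False

    def send_request(self, msg_type):
        # Any request type (M1, M2, M3) starts the counter; the type is ignored.
        self.count = 0
        self.running = True

    def receive_ack(self):
        # An ACK from the peer stops the counter.
        self.running = False

    def tick(self):
        """Advance one clock cycle; return True on the cycle the timeout fires."""
        if not self.running:
            return False
        self.count += 1
        if self.count >= self.timeout_cycles:
            self.running = False
            return True
        return False


timer = AckTimer(timeout_cycles=3)
timer.send_request("M1")
fired = [timer.tick() for _ in range(4)]  # no ACK arrives, so the timer expires
```

Note that `send_request` never looks at `msg_type`. That detail matters later, when we ask how much each message type needs to be covered.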

So now how do we cover this feature? Let’s think it through from all three points of view and see what benefits each of them brings.

Requirements specification point of view:

This is the simplest and most straightforward among the three. Coverage items would be:

- Cover successful ACK reception for all three message types

- Cover the timeout for all three message types

- Cover the timeout value for min (1 ms), max (3 ms) and a middle value (2 ms)
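The three items above can be sketched as a small coverage model. In a real testbench this would typically be written in the verification language in use; the Python below is only an illustrative stand-in, and the names `RequirementsCoverage`, `sample` and `holes` are hypothetical.

```python
from itertools import product

MESSAGE_TYPES = ("M1", "M2", "M3")
OUTCOMES = ("ack", "timeout")
TIMEOUT_BINS_MS = (1, 2, 3)  # min, middle and max programmed values


class RequirementsCoverage:
    """Illustrative bins for the requirements point of view (hypothetical API)."""

    def __init__(self):
        # One bin per (message type, outcome) pair: 3 x 2 = 6 bins.
        self.msg_outcome = {key: 0 for key in product(MESSAGE_TYPES, OUTCOMES)}
        # One bin per programmed timeout value of interest.
        self.timeout_value = {ms: 0 for ms in TIMEOUT_BINS_MS}

    def sample(self, msg_type, outcome, timeout_ms):
        self.msg_outcome[(msg_type, outcome)] += 1
        # The timeout value bin is hit only when a timeout actually occurs.
        if outcome == "timeout" and timeout_ms in self.timeout_value:
            self.timeout_value[timeout_ms] += 1

    def holes(self):
        """Return the bins that have not been hit yet."""
        missing = [key for key, hits in self.msg_outcome.items() if hits == 0]
        missing += [("timeout_ms", ms)
                    for ms, hits in self.timeout_value.items() if hits == 0]
        return missing


cov = RequirementsCoverage()
cov.sample("M1", "ack", timeout_ms=1)
cov.sample("M2", "timeout", timeout_ms=3)
remaining = cov.holes()
```

Calling `holes()` at the end of a regression gives the list of requirement-level scenarios that no test has exercised yet.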

Don’t scroll down or look down.

Can you think of any more cases? Make a mental note; if they are not listed in the sections below, post them as comments for discussion. Please do.

Micro-architecture point of view:

The timer start condition does not care which message type started it. All message types are the same from the timer’s point of view. From the timer logic implementation point of view, a timeout due to any one message type is sufficient.

Does it mean we don’t need to cover timeout due to different message types?

It’s still relevant to cover these from the requirements specification point of view. Remember, through verification we are proving that the timeout functionality will work as defined by the specification. This means we need to prove that it will work for all three message types.

The micro-architecture, however, has its own challenges.

- Timeout needs to be covered multiple times to ensure that the timer reloads correctly after a timeout

- A system reset while the timer is running, followed by a timeout during subsequent operation, needs to be covered. It ensures that a reset in the middle of operation resets the timer logic cleanly without side effects (power-on reset vs. reset in the middle of operation)

- If the timer can run on different clock sources, each source needs to be covered to ensure that it generates the right delays and functions correctly

The requirements specification may not care about these, but they are important from the micro-architecture or implementation point of view.
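Unlike the requirement-level bins, these items depend on the order of events, so a coverage monitor needs a little state. The sketch below is one possible way to track them. The class `MicroArchCoverage`, its callback names, and the clock source names `clk_a` and `clk_b` are hypothetical, and clearing the "timeout seen" flag on reset is a modeling choice, not something the article prescribes.

```python
class MicroArchCoverage:
    """Illustrative sequence coverage for the timer logic (hypothetical API)."""

    def __init__(self, clock_sources):
        self.repeated_timeouts = 0        # timeout after an earlier timeout
        self.timeout_after_mid_reset = 0  # timeout after a reset hit a running timer
        self.timeouts_per_clock = {src: 0 for src in clock_sources}
        self._timer_running = False
        self._seen_timeout = False
        self._mid_reset_pending = False

    def on_timer_start(self):
        self._timer_running = True

    def on_ack(self):
        self._timer_running = False

    def on_reset(self):
        # Only a reset that lands while the timer is running is interesting here.
        if self._timer_running:
            self._mid_reset_pending = True
        self._timer_running = False
        self._seen_timeout = False  # assumed: reset starts a fresh reload history

    def on_timeout(self, clock_source):
        if self._seen_timeout:
            self.repeated_timeouts += 1
        if self._mid_reset_pending:
            self.timeout_after_mid_reset += 1
            self._mid_reset_pending = False
        self.timeouts_per_clock[clock_source] += 1
        self._seen_timeout = True
        self._timer_running = False


ucov = MicroArchCoverage(["clk_a", "clk_b"])
for _ in range(2):                 # two timeouts in a row exercise the reload path
    ucov.on_timer_start()
    ucov.on_timeout("clk_a")
ucov.on_timer_start()
ucov.on_reset()                    # reset in the middle of a running timer
ucov.on_timer_start()
ucov.on_timeout("clk_b")
```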

Now let’s look at the intersection.

Intersection between requirements and micro-architecture point of view

This is the most interesting area. Bugs love this area. They love it because at the intersection the shadow of one falls on the other, creating a dark spot. Dark spots are ideal places for bugs. Don’t believe me? Let’s illuminate and see if we find one.

Synchronous designs generally have a weakness within +/- 1 clock cycle of key events. Designers often have to juggle a lot of conditions, so they often use delayed variants of key signals to meet their goals.

The micro-architecture timeout event intersecting with the external message reception event is an interesting area. The requirements specification, however, only cares whether the acknowledgement arrives within the timeout duration or after it.

What happens when the acknowledgement arrives in the following timing alignments?

- Acknowledgement arrives just 1 clock before timeout

- Acknowledgement arrives at the same clock as timeout

- Acknowledgement arrives just 1 clock after timeout
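These three alignments can be expressed as bins on the distance, in clock cycles, between ACK arrival and the timeout event. The helper below is a minimal sketch. The function name, the bin labels, and the two extra "well before" and "well after" bins are assumptions added so that every sample lands somewhere.

```python
def classify_ack_alignment(ack_cycle, timeout_cycle):
    """Map ACK arrival relative to the timeout cycle onto a coverage bin name."""
    delta = ack_cycle - timeout_cycle
    if delta == -1:
        return "ack_1_clk_before_timeout"
    if delta == 0:
        return "ack_same_clk_as_timeout"
    if delta == 1:
        return "ack_1_clk_after_timeout"
    # Everything else is the ordinary case the requirements view already covers.
    return "ack_well_before_timeout" if delta < 0 else "ack_well_after_timeout"


# Hypothetical run: timeout programmed to fire at cycle 1000.
hits = {classify_ack_alignment(ack, 1000) for ack in (400, 999, 1000, 1001)}
```

A directed or constrained-random test can then steer the peer’s ACK delay until all three boundary bins appear in `hits`.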

This area of intersection between the micro-architecture and the requirements specification leads to interesting scenarios. Some might call these corner cases, but if we can figure them out through a systematic thought process, shouldn’t that be done to the best possible extent?

Should these timing alignment cases be covered for all message types? It’s not required, because we know that the timer logic is not sensitive to message types.

Another interesting question is why not merge points of view #1 and #2? The answer is that the thought process has to focus on one aspect at a time: enumerate it fully, then move to the other aspect and enumerate it fully, and then figure out the intersection between them. Any shortcut taken here can lead to scenarios being missed.

Closure

This type of intent-focused, step-by-step process enhances the quality of functional coverage plans and eases maintenance when features are updated.

Some of you might be thinking that it will take a long time, and that we have schedule pressure and a lack of resources. Let’s not forget that quality is remembered long after the price is forgotten.