Functional coverage is one of the key metrics for measuring functional verification progress and closure. It complements code coverage by addressing its limitations, and it is one of the key contributors to the quality of functional verification.

Code coverage holes are typically closed first, followed by functional coverage holes. Functional coverage is therefore one of the last gates for catching uncovered areas. Once the design is signed off from a functional coverage point of view, it is one step closer to tapeout from a functional verification point of view.

Once functional coverage is signed off, bugs hiding in the uncovered areas will most likely be discovered in silicon validation. The cost of a bug increases significantly when it is caught later in the ASIC verification cycle, which is why functional coverage matters. Note that functional coverage does not catch bugs directly. It illuminates the various design areas and so increases the probability of bugs being found. To make the best use of it, we need to understand the different types of functional coverage.

Functional coverage is the implementation of the coverage plan created in the planning phase. The coverage plan is part of the verification plan. It draws its verification requirements primarily from two sources: the requirements specification and the micro-architectural specification of the implementation. Functional coverage should address both of them.

There are two types of functional coverage, black box and white box, created to address these two sources.

Let’s look at them in more detail.

Black box functional coverage

Functional coverage addressing the requirements specification is referred to as black box functional coverage. It is agnostic to the specific implementation of the requirements and does not depend on the micro-architectural implementation.

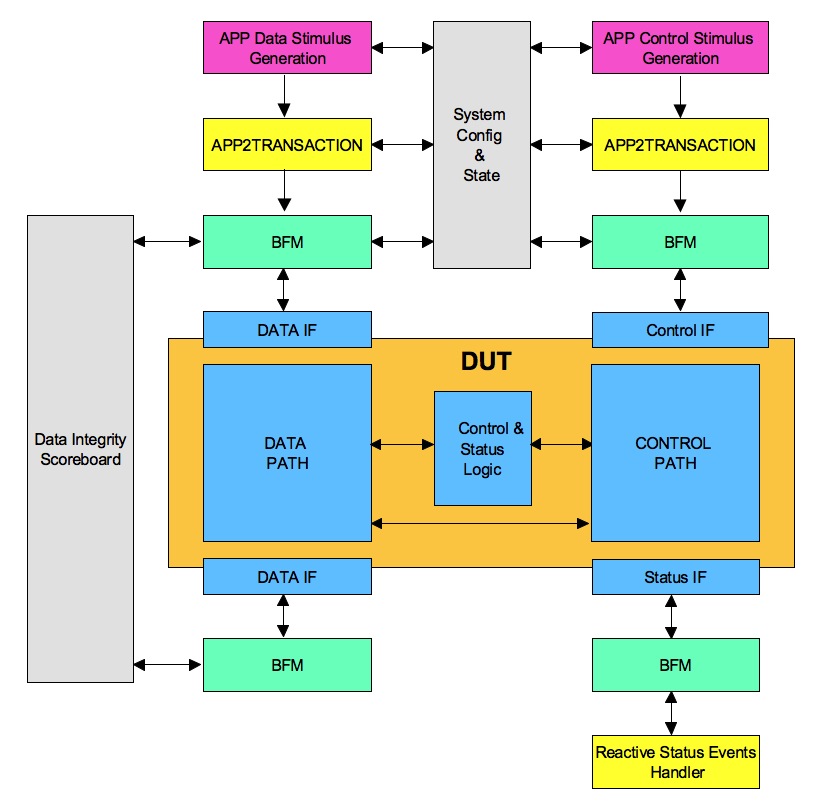

Typically it’s extracted with the help of various test bench components. It also represents coverage in terms of the design’s final application usage.

Let’s understand this better with a simple example. In the processor world, application usage means instructions. One area for functional coverage would be to cover all the instructions in all their possible addressing modes, such as register, direct memory and indirect memory. As another example, in the peripheral interconnect world, one coverage item can be to cover all the types of packets exchanged, with various legal and illegal values for all the packet fields.
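The processor example above can be sketched as a small cross coverage model. In a real test bench this would normally be written as a SystemVerilog covergroup; the Python below is only an illustration, and the opcode list, the mode names and the `CrossCoverage` class are all hypothetical.

```python
from itertools import product

# Hypothetical instruction set and addressing modes, for illustration only.
OPCODES = ("ADD", "SUB", "LOAD", "STORE")
MODES = ("register", "direct_memory", "indirect_memory")


class CrossCoverage:
    """Tracks which (opcode, mode) pairs have been observed."""

    def __init__(self, opcodes, modes):
        # One bin per combination, each starting with zero hits.
        self.hits = {pair: 0 for pair in product(opcodes, modes)}

    def sample(self, opcode, mode):
        """Record one observed instruction; unknown pairs are rejected."""
        key = (opcode, mode)
        if key not in self.hits:
            raise ValueError(f"unexpected combination: {key}")
        self.hits[key] += 1

    def percent(self):
        """Percentage of bins hit at least once."""
        covered = sum(1 for count in self.hits.values() if count > 0)
        return 100.0 * covered / len(self.hits)

    def holes(self):
        """Bins never hit, in definition order."""
        return [pair for pair, count in self.hits.items() if count == 0]


cov = CrossCoverage(OPCODES, MODES)
observed = [("ADD", "register"), ("LOAD", "direct_memory"), ("ADD", "register")]
for opcode, mode in observed:
    cov.sample(opcode, mode)

print(f"{cov.percent():.1f}% covered, {len(cov.holes())} holes")
```

The list returned by `holes()` is the useful part: it names the instruction and mode combinations that no test has exercised yet.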

One of the best ways to write black box functional coverage is to generate it from the specification. This preserves the intent of the coverage items and lets the functional coverage evolve automatically with the specification. Find out how you can take the first step from specification to functional coverage.
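As a rough sketch of what generating coverage from a specification can look like, the snippet below derives legal and illegal value bins for packet fields from a small table. The `PACKET_SPEC` table, its field names and the helper functions are assumptions made up for this illustration, not part of any real protocol or tool.

```python
# Hypothetical spec excerpt: field name -> (bit width, set of legal values).
PACKET_SPEC = {
    "pkt_type": (2, {0, 1, 2}),       # value 3 is treated as reserved, so illegal
    "length": (3, set(range(1, 8))),  # zero length is treated as illegal
}


def generate_bins(spec):
    """Derive one coverage bin per encodable value, tagged legal or illegal."""
    bins = {}
    for field, (width, legal) in spec.items():
        for value in range(2 ** width):
            kind = "legal" if value in legal else "illegal"
            bins[(field, value, kind)] = 0
    return bins


def sample(bins, spec, packet):
    """Increment the bin matching each field value of an observed packet."""
    for field, value in packet.items():
        kind = "legal" if value in spec[field][1] else "illegal"
        bins[(field, value, kind)] += 1


bins = generate_bins(PACKET_SPEC)
sample(bins, PACKET_SPEC, {"pkt_type": 3, "length": 4})

illegal_bins = [key for key in bins if key[2] == "illegal"]
print(len(bins), "bins generated,", len(illegal_bins), "illegal")
```

Because the bins come from the table, changing a field width or its legal set in the specification regenerates the coverage model without any hand edits.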

White box functional coverage

Functional coverage covering the micro-architectural implementation is referred to as white box functional coverage.

White box verification and its functional coverage are among the under-focused areas. This is due to reliance on standard code coverage to take care of it. Verification engineers typically leave this space to the design engineers. Design engineers do try to cover it by adding assertions on assumptions and test points to check whether the scenarios of interest to the implementation are covered.

But for design engineers this is additional work among many other tasks, so it ends up not getting the focus it needs. This can sometimes lead to very basic issues being discovered very late in the game.

White box functional coverage depends on the specific design implementation. Typically, it taps into internal design signals to extract the functional coverage. This tapping can be at the design’s interface level or deep inside the design.

Let’s understand this with a simple example. In the processor world, one white box coverage item can be the instruction pipelines: covering all possible instruction combinations that occur in them. Note that this will not be addressed by code coverage.

In the peripheral interconnect world, it can be the FIFOs in the data path: covering different utilization levels, including the full condition; transitions from empty to full and back to empty; errors injected at a certain internal RTL state; the number of clocks for which an interface experienced a stall; and all possible request combinations active at a critical arbiter interface. These are just a few cases. A simple LUT access coverage could have helped prevent the famous Pentium FDIV bug.
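The FIFO items above can be modelled in a few lines. This is a minimal sketch that assumes the FIFO occupancy is sampled once per clock from an internal design signal; the `FifoCoverage` class and the depth of 4 are hypothetical, and a real test bench would usually express this as a SystemVerilog covergroup with transition bins.

```python
class FifoCoverage:
    """Collects fill-level bins and the empty -> full -> empty transition."""

    def __init__(self, depth):
        self.depth = depth
        self.level_hits = {"empty": 0, "partial": 0, "full": 0}
        self.empty_full_empty = 0  # completed empty -> full -> empty sequences
        self._stage = None         # None, "empty", or "full" (full seen after empty)

    def _bin(self, occupancy):
        if occupancy == 0:
            return "empty"
        if occupancy == self.depth:
            return "full"
        return "partial"

    def sample(self, occupancy):
        """Call once per clock with the sampled FIFO occupancy."""
        if not 0 <= occupancy <= self.depth:
            raise ValueError(f"occupancy {occupancy} outside 0..{self.depth}")
        name = self._bin(occupancy)
        self.level_hits[name] += 1
        if name == "empty":
            if self._stage == "full":
                # Reached empty again after having been full.
                self.empty_full_empty += 1
            self._stage = "empty"
        elif name == "full" and self._stage == "empty":
            self._stage = "full"


cov = FifoCoverage(depth=4)
for occupancy in [0, 1, 2, 4, 4, 3, 1, 0, 2, 0]:
    cov.sample(occupancy)

print(cov.level_hits, cov.empty_full_empty)
```

Partial levels between full and empty do not reset the sequence, so the transition bin counts any drain from full back to empty, however many clocks it takes.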

The effort of writing white box coverage depends on the complexity and size of the design. It can be reduced by up to 3x by generating the coverage instead of writing it by hand. Generation can happen through plug-ins in the RTL or with a framework like curiosity.

White box and black box functional coverage can overlap in some areas: there will be a bit of white box functional coverage in black box functional coverage and vice versa. The right balance of both provides the desired risk reduction and helps achieve high functional verification quality.