The RISC-V Open Virtual Platform simulator quotes, “Silicon without software is just sand.” Well, it’s true, isn’t it?

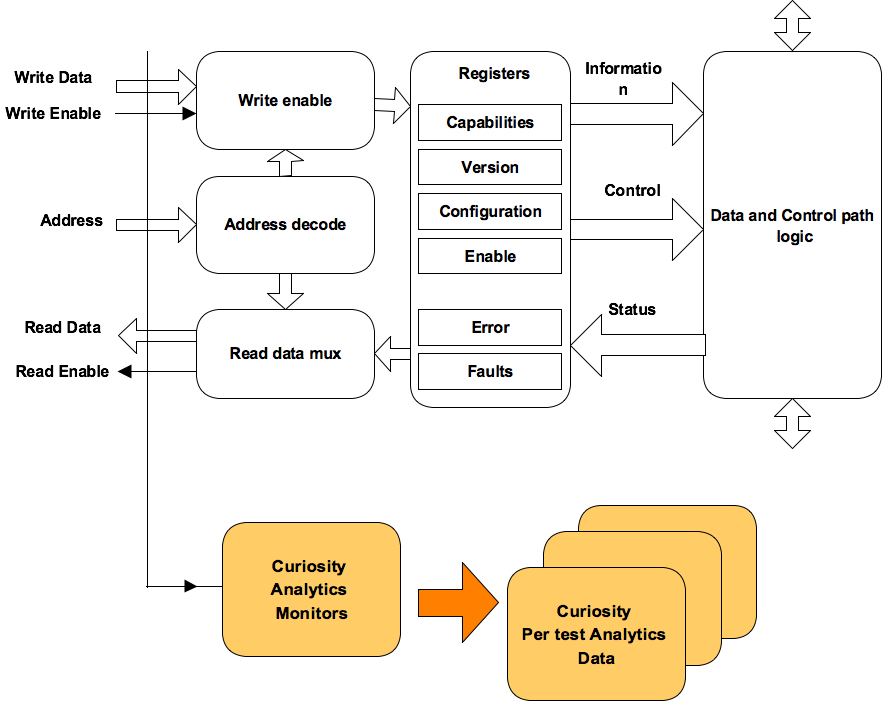

For many design IPs, the software programming interface is a set of registers. The registers in a design IP can be broadly divided into three categories:

- Information registers: Registers that provide static information about the design IP. Examples include the vendor ID, revision information, and the capabilities of the design.

- Control registers: Registers that control the behavior or features of the design IP. Examples include enable/disable controls, thresholds, and timeout values.

- Status registers: Registers that report various events. Examples include interrupt status, error status, link operational status, and faults.

The software uses the “information registers” for the initial discovery of the design IP. From there, it programs a subset of the control registers in a specific order to make the design IP ready for operation. During operation, the status registers let the software determine whether the design IP is performing as expected or needs attention.
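
The discovery, configuration, and monitoring sequence can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical register map (`VENDOR_ID`, `CTRL_TIMEOUT`, `CTRL_ENABLE`, `STATUS`), hypothetical bit meanings, and a dictionary-backed `FakeDevice` standing in for a real bus interface.

```python
from dataclasses import dataclass, field

# Hypothetical register offsets, for illustration only.
VENDOR_ID = 0x00     # information register
REVISION = 0x04      # information register
CTRL_TIMEOUT = 0x10  # control register
CTRL_ENABLE = 0x14   # control register
STATUS = 0x20        # status register

EXPECTED_VENDOR_ID = 0x1234  # hypothetical vendor ID
STATUS_READY = 0x1           # hypothetical "ready" bit
STATUS_ERROR = 0x2           # hypothetical "error" bit


@dataclass
class FakeDevice:
    """Dictionary-backed stand-in for a memory-mapped design IP."""
    regs: dict = field(default_factory=lambda: {
        VENDOR_ID: EXPECTED_VENDOR_ID, REVISION: 0x2,
        CTRL_TIMEOUT: 0, CTRL_ENABLE: 0, STATUS: 0})

    def read(self, addr: int) -> int:
        return self.regs[addr]

    def write(self, addr: int, value: int) -> None:
        self.regs[addr] = value
        # Model the IP reporting readiness once it is enabled.
        if addr == CTRL_ENABLE and value & 0x1:
            self.regs[STATUS] |= STATUS_READY


def bring_up(dev: FakeDevice, timeout: int) -> str:
    # 1. Discovery: use an information register to identify the IP.
    if dev.read(VENDOR_ID) != EXPECTED_VENDOR_ID:
        return "unknown device"
    # 2. Configuration: program control registers in the required order
    #    (in this hypothetical IP, the timeout must be set before enabling).
    dev.write(CTRL_TIMEOUT, timeout)
    dev.write(CTRL_ENABLE, 0x1)
    # 3. Operation: check the status register to see whether attention is needed.
    status = dev.read(STATUS)
    if status & STATUS_ERROR:
        return "needs attention"
    return "ready" if status & STATUS_READY else "not ready"


print(bring_up(FakeDevice(), timeout=100))  # prints "ready"
```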

Register verification

From a verification point of view, we need to look at registers from two perspectives:

- Micro-architecture point of view

- Requirement specification point of view

The micro-architecture point of view focuses on whether the register structure is implemented correctly. It covers items such as:

- Each register bit is implemented with the correct access type: read-only, read-write, write-to-clear, read-to-clear, or write-1-to-clear

- The entire register address space is accessible for both reads and writes

- Byte enables, if used, work correctly

- All possible read and write sequences are operational

- Protected registers behave as expected
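
The access types above can be captured in a small reference model that a test compares DUT reads against. This is a minimal sketch, assuming one field per register and using access-policy names in the style of UVM (`RO`, `RW`, `WC`, `RC`, `W1C`); `FieldModel` and `hw_set` are hypothetical names, not part of any generated register layer.

```python
from enum import Enum


class Access(Enum):
    RO = "RO"    # read-only: software writes are ignored
    RW = "RW"    # read-write
    WC = "WC"    # any write clears the whole field
    RC = "RC"    # a read returns the value, then clears it
    W1C = "W1C"  # writing 1 to a bit clears that bit


class FieldModel:
    """Hypothetical reference model of one register field's access behavior."""

    def __init__(self, access: Access, width: int, reset: int = 0):
        self.access = access
        self.mask = (1 << width) - 1
        self.value = reset & self.mask

    def write(self, data: int) -> None:
        data &= self.mask
        if self.access is Access.RW:
            self.value = data
        elif self.access is Access.WC:
            self.value = 0
        elif self.access is Access.W1C:
            self.value &= ~data & self.mask
        # RO and RC fields ignore software writes.

    def read(self) -> int:
        value = self.value
        if self.access is Access.RC:
            self.value = 0  # clear-on-read side effect
        return value

    def hw_set(self, bits: int) -> None:
        """Hardware-side event setting status bits (e.g. an interrupt)."""
        self.value |= bits & self.mask


status = FieldModel(Access.W1C, width=4)
status.hw_set(0b1011)
status.write(0b0010)        # clear bit 1 only
print(bin(status.read()))   # prints 0b1001
```

A test would apply the same write to the DUT and the model, then compare the DUT read data with `read()` on the model.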

The requirements point of view focuses on the correctness of the functionality the registers provide. It covers items such as:

- Whether the power-on reset values match the values defined by the specification

- Whether the values programmed into the control registers have the desired effect

- Whether the status registers correctly reflect the events that update them

- Whether status registers that must retain their values through reset cycling actually retain them

- Whether registers that must be restored to proper values through power cycling are restored correctly
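
A power-on reset check against the specification might look like the following sketch. The `SPEC` table, its addresses and values, and the `read` callback are all hypothetical; the per-register mask excludes bits whose reset value the specification leaves undefined.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical register specification: name -> (address, reset value, compare mask).
SPEC: Dict[str, Tuple[int, int, int]] = {
    "VENDOR_ID": (0x00, 0x1234, 0xFFFF),
    "CTRL":      (0x10, 0x0000_0001, 0xFFFF_FFFF),
    "STATUS":    (0x20, 0x0000_0000, 0x0000_00FF),
}


def check_reset_values(read: Callable[[int], int]) -> List[str]:
    """Compare post-reset reads against the specified reset values."""
    mismatches = []
    for name, (addr, expected, mask) in SPEC.items():
        actual = read(addr)
        if (actual ^ expected) & mask:  # only compare bits covered by the mask
            mismatches.append(
                f"{name} @0x{addr:02X}: read 0x{actual:08X}, "
                f"expected 0x{expected:08X} (mask 0x{mask:08X})")
    return mismatches


# Simulated post-reset register contents; CTRL comes up with the wrong value.
after_reset = {0x00: 0x1234, 0x10: 0x0, 0x20: 0xAB00}
print(check_reset_values(lambda addr: after_reset[addr]))  # reports one mismatch, for CTRL
```

The same comparison could be reused for the reset-retention and power-cycling items by substituting the values expected to survive the cycle for the reset values.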

Micro-architectural implementation correctness is typically verified by a set of generated tests. Typically, a single automation flow handles RTL register generation, UVM register abstraction layer (RAL) generation, and generation of the associated tests. These flows also tend to generate register documentation, which can serve as a programming guide for both the verification and software engineering teams.

Functional correctness of the registers is the more challenging area. Information registers are typically covered by tests that check initial values. Functional correctness of the control and status registers is spread across various tests. Some tests explicitly verify register functionality, but many tests that exercise it are not verifying only that. They focus on higher-level operational correctness and use the control and status registers along the way, so they verify them only indirectly.

In spite of all this verification effort, register blocks still end up with issues that are found late in the verification cycle or during silicon bring-up.

Why are we still missing issues?

Register functionality evolves throughout project execution. Typical changes include additions, relocations, extensions, updates to the definitions of existing registers, and compaction of the register space by removing registers.

Automation associated with register generation eases the process of making changes. At the same time, layers of automation can make reviews difficult or give a false sense of security that all changes are being verified by the automatically generated tests. The key point is that automation is only as good as the high-level register specification provided as input. If the input contains mistakes, automation can mask them: because the RTL and the generated tests are derived from the same specification, they agree with each other even when the specification itself is wrong.

Since register verification is spread across automated tests, register-specific tests, and other feature tests, it's difficult to pinpoint what gets verified and to what extent.

What can we do about it?

The first option is the traditional review. It can catch many issues, but given the total number of registers and their dynamic nature, it's difficult to do this review thoroughly and iteratively.

We need to aid the review process and open up the verification to questioning by designers and architects. This works best when high-level data about the verification done on the registers is available.

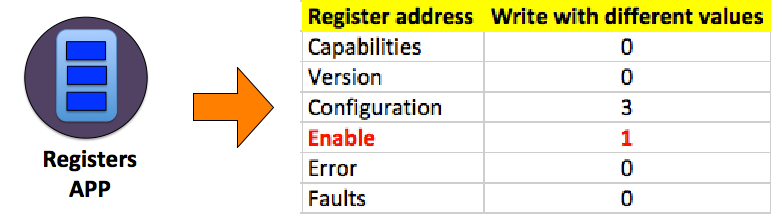

We have built a register analytics app that provides various insights into your register verification in simulation.

One of the register app's capabilities helped catch issues with dynamically reprogrammed registers. A subset of control registers can be programmed multiple times dynamically during operation. As the register specification kept changing, transition coverage was never added for a specific register that was expected to be dynamically reprogrammed.

Our register analytics app provided data on which registers were dynamically reprogrammed, how many times they were reprogrammed, and which unique value transitions were seen. The data was made available as a spreadsheet, so one could quickly filter for the registers that were not dynamically reprogrammed. This prompted the question of why certain registers were not being dynamically reprogrammed, and it caught several dynamically re-programmable registers that were never reprogrammed. When they were dynamically reprogrammed, some of them even led to the discovery of additional issues.
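
The kind of data described here can be approximated from a log of register writes. This is an illustrative sketch, not the app's actual implementation: it assumes a hypothetical per-test log of `(time, register, value)` writes and a hypothetical `op_start` time separating initial configuration from operation, and counts writes at or after `op_start` as dynamic reprogramming.

```python
import csv
import io
from typing import Dict, Iterable, List, Tuple

Write = Tuple[int, str, int]  # (simulation time, register name, written value)


def reprogramming_report(writes: Iterable[Write], all_registers: List[str],
                         op_start: int) -> Dict[str, dict]:
    """Summarize how each register was (re)programmed in one test."""
    # Pre-populate every register so never-written ones show zero counts.
    stats = {name: {"writes": 0, "dynamic_writes": 0, "transitions": set()}
             for name in all_registers}
    last_value: Dict[str, int] = {}
    # Stable sort by time keeps log order for writes at the same time.
    for time, name, value in sorted(writes, key=lambda w: w[0]):
        s = stats.setdefault(name, {"writes": 0, "dynamic_writes": 0, "transitions": set()})
        s["writes"] += 1
        if time >= op_start:
            s["dynamic_writes"] += 1
        if name in last_value and last_value[name] != value:
            s["transitions"].add((last_value[name], value))  # unique value transition
        last_value[name] = value
    return stats


def to_csv(stats: Dict[str, dict]) -> str:
    """Render the summary as spreadsheet-friendly CSV."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["register", "writes", "dynamic_writes", "unique_transitions", "transitions"])
    for name, s in sorted(stats.items()):
        pairs = " ".join(f"0x{a:X}->0x{b:X}" for a, b in sorted(s["transitions"]))
        writer.writerow([name, s["writes"], s["dynamic_writes"], len(s["transitions"]), pairs])
    return out.getvalue()


log = [(0, "CTRL_TIMEOUT", 0x10), (0, "CTRL_THRESH", 0x4),
       (500, "CTRL_TIMEOUT", 0x20), (900, "CTRL_TIMEOUT", 0x10)]
stats = reprogramming_report(log, ["CTRL_TIMEOUT", "CTRL_THRESH", "CTRL_RATE"], op_start=100)
never_reprogrammed = [n for n, s in stats.items() if s["dynamic_writes"] == 0]
print(never_reprogrammed)  # ['CTRL_THRESH', 'CTRL_RATE']
print(to_csv(stats))
```

Passing the full list of re-programmable registers matters: a register that is never written does not appear in the log at all, so without it the "not reprogrammed" filter would silently miss exactly the registers of interest.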

We have many more micro-architecture stimulus coverage analytics apps that can quickly provide useful insights about your stimulus. The data is available both per test and aggregated across the complete regression. Information from third-party tools can also add a level of redundancy to verification efforts, catching hidden issues in the automation already in use.
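
Aggregating per-test results across a regression can be as simple as summing counts and taking the union of observed transitions. This sketch assumes each test yields a dictionary mapping register names to records with hypothetical `writes`, `dynamic_writes`, and `transitions` keys; it also counts how many tests reprogrammed each register, which helps spot registers exercised by only one test.

```python
from typing import Dict, Iterable


def aggregate(per_test: Iterable[Dict[str, dict]]) -> Dict[str, dict]:
    """Merge per-test register statistics into regression-level totals."""
    total: Dict[str, dict] = {}
    for stats in per_test:
        for name, s in stats.items():
            t = total.setdefault(name, {"writes": 0, "dynamic_writes": 0,
                                        "transitions": set(), "tests_reprogramming": 0})
            t["writes"] += s["writes"]
            t["dynamic_writes"] += s["dynamic_writes"]
            t["transitions"] |= s["transitions"]  # union of unique transitions
            if s["dynamic_writes"] > 0:
                t["tests_reprogramming"] += 1
    return total


test_a = {"CTRL_TIMEOUT": {"writes": 3, "dynamic_writes": 2, "transitions": {(0x10, 0x20)}}}
test_b = {"CTRL_TIMEOUT": {"writes": 1, "dynamic_writes": 0, "transitions": set()},
          "CTRL_THRESH": {"writes": 2, "dynamic_writes": 1, "transitions": {(0x4, 0x8)}}}
regression = aggregate([test_a, test_b])
print(regression["CTRL_TIMEOUT"]["tests_reprogramming"])  # prints 1
```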

If you are busy, we also offer services to set up our analytics flow, containing both functional and statistical coverage, run your test suites, and share insights that can help you catch critical bugs and improve your verification quality.