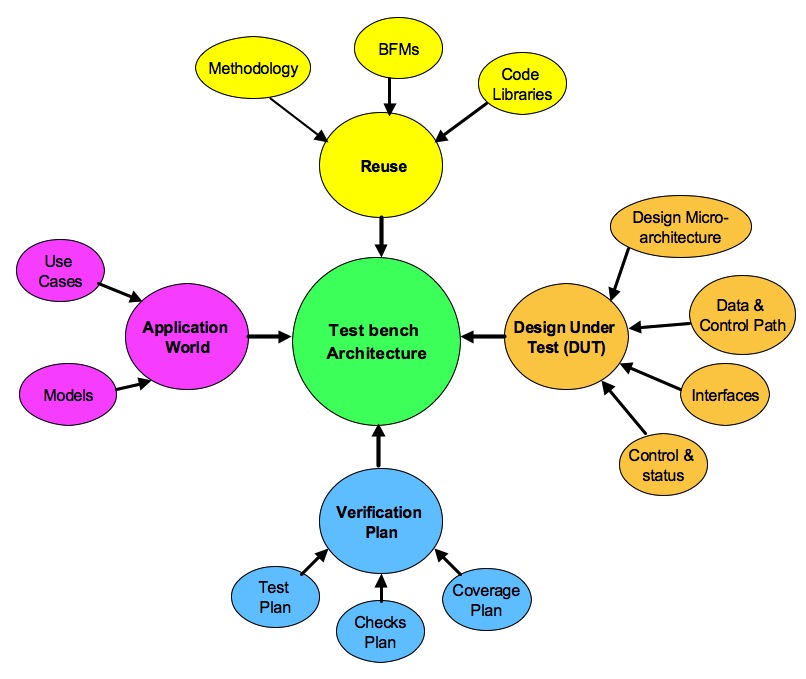

Verification plan review is still a manual process. A verification plan is made up of three plans: the test plan, the checks plan, and the coverage plan. As of today, I am not aware of any tool that automates verification plan review using machine learning.

The first challenge in verification plan review is having a verification plan at all. The second challenge is making it a traceable verification plan, so that it can be trusted.
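One way to picture traceability is the minimal sketch below. It assumes that "traceable" means every plan item records the requirement specification sections it was derived from. The `PlanItem` structure, the helper names, the item names, and the section numbers are all hypothetical and used only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PlanItem:
    """One entry in the test, checks, or coverage plan (hypothetical structure)."""
    plan: str                        # "test", "checks", or "coverage"
    name: str
    spec_refs: Tuple[str, ...] = ()  # specification sections this item traces to


def untraced_items(items: List[PlanItem]) -> List[str]:
    """Names of plan items that do not point back to any specification section."""
    return [item.name for item in items if not item.spec_refs]


def uncovered_sections(items: List[PlanItem], spec_sections: List[str]) -> List[str]:
    """Specification sections that no plan item refers to."""
    referenced = {ref for item in items for ref in item.spec_refs}
    return sorted(set(spec_sections) - referenced)


# Illustrative data only: made-up item names and section numbers.
items = [
    PlanItem("test", "reset_sequence_test", ("3.1",)),
    PlanItem("checks", "fifo_overflow_check", ("4.2",)),
    PlanItem("coverage", "burst_length_cover", ()),
]
spec_sections = ["3.1", "4.2", "5.0"]

print(untraced_items(items))                     # ['burst_length_cover']
print(uncovered_sections(items, spec_sections))  # ['5.0']
```

A reviewer could start from these two lists: items with no reference cannot be trusted yet, and sections with no item are likely holes in the plan.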

What homework is required from the verification plan reviewer?

Building a verification plan requires both specification expertise and verification expertise. The verification plan writer gets time to do a detailed scan of the requirement specification while writing the plan.

The challenge for the verification plan reviewer is that they will not get the same amount of time. The reviewer still has to do the homework to make the review effective.
