Checks or assertion plan

We have already seen that verification is about two things: stimulus generation and checks.

The test plan focuses exclusively on stimulus generation. The coverage plan focuses on coverage of the intended scenarios through the stimulus generation listed in the test plan. The checks plan lists the checks performed during test execution in the various components of the test bench.

A checks plan lists the checks by looking at the requirements from two key perspectives:

- Is the DUT doing what it should do? This is a positive check. It checks whether the DUT does what it is expected to do.

- Is the DUT doing what it should not do? This is a negative check. It checks whether the DUT does anything it is not expected to do, or causes any unintended side effects after doing what it is expected to do.

Verification requirements for building the checks plan come from:

- Requirements specifications, which are also used for the test plan and coverage plan

- Design under test (DUT) micro-architecture specifications

Just as digital logic is primarily made up of combinational and sequential logic, checks are also of two types. The first category is immediate checks, which are done based on the information currently available. The second category is state-based checks, which require state information about what has happened in the past to perform the current check. Their validity depends on the context captured by that state.
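The difference between the two categories can be sketched as follows. This is only an illustration in Python, not test bench code; the names `check_parity` and `ResponseOrderCheck`, the even parity rule and the in-order response rule are all assumptions made up for the example.

```python
from dataclasses import dataclass, field


def check_parity(data: int, parity_bit: int) -> bool:
    """Immediate check: uses only the values visible right now (even parity assumed)."""
    return bin(data).count("1") % 2 == parity_bit


@dataclass
class ResponseOrderCheck:
    """State-based check: remembers outstanding request IDs (hypothetical in-order rule)."""
    outstanding: list = field(default_factory=list)

    def on_request(self, req_id: int) -> None:
        # Record history so that a later response can be judged in context.
        self.outstanding.append(req_id)

    def on_response(self, req_id: int) -> bool:
        # Valid only if the response matches the oldest outstanding request.
        if not self.outstanding or self.outstanding[0] != req_id:
            return False
        self.outstanding.pop(0)
        return True
```

The parity check can be evaluated on any single sample, while the response check gives a meaningful answer only because it has tracked the earlier requests.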

Location of check implementation

The DUT’s interface to the external world can be made up of multiple buses and signals. Each bus, as described in the test bench architecture, has a BFM associated with it to handle it.

Most of the interface-specific checks are implemented in the BFM. Any additional information required to implement a check that the BFM does not contain is typically passed to the BFM through configuration, transactions or APIs. Checks that depend on multiple interfaces are implemented in the test bench components.

It is well known that all checks should be enabled by default, but there is a catch. Checks that require user initialization to work should start out as warnings until the user sets them up. Otherwise these checks result in false failures due to the lack of setup, which can be a distraction, especially during the early bring-up stage of the DUT.

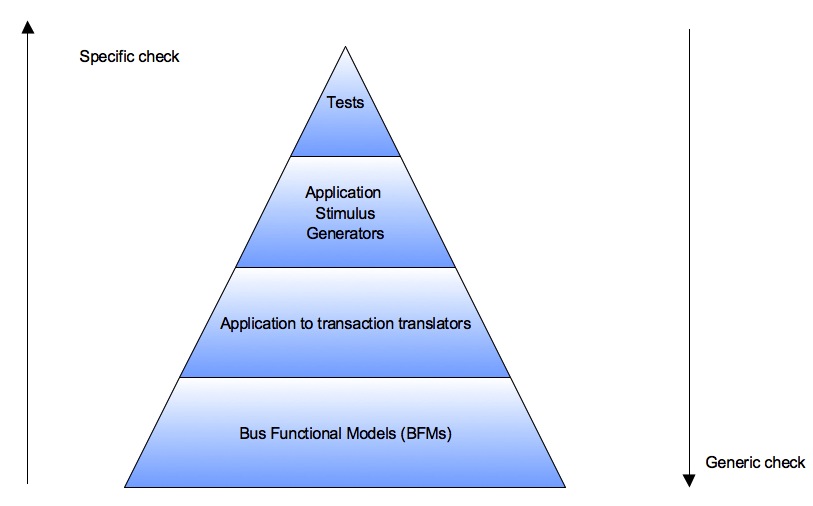

Generic checks sit lower in the check pyramid. As checks become more dependent on the stimulus and scenario, they move up the pyramid. Finally, test-scenario-specific checks are implemented in the test. Test-specific checks should be minimized, as their usefulness is limited to that specific test. Even when a test-specific check has to be implemented, see whether it can be broken up into smaller checks so that parts of it can be implemented in the test bench.

Sometimes, while defining the checks plan, you may come across a check that cannot be implemented in the test bench and may have to go in as an assertion inside the DUT. Still go ahead and enumerate it.

Traceability of checks

Every check should be accountable. For functional checks there should be a way to correlate them to the checks plan through a unique ID. Using this ID, the check’s severity should be configurable: it should be possible to upgrade or downgrade it through the configuration or a command line argument. Whenever a check fails, the error message should be logged with this unique check ID.

A meaningful error message should be associated with each check and displayed along with the failure. Writing cryptic error messages is like sentencing debug to hang till death. Error messages provide vital clues and insights into the nature of the failure and set the direction for debug. If they are not clear, an already heavy activity like debug becomes even heavier. A high-quality error message makes two things very clear:

- What was expected by the check

- What has actually happened

Along with this, including any additional information that helps trace the cause of the problem is extremely valuable. It is worth adding a few extra lines of code to track some additional state to help trace issues faster. Any early investment in making debug easier pays off very well in the regression phase.
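A minimal sketch of these traceability ideas, written in Python purely for illustration: the `CheckReporter` class, the `Severity` levels and the check ID `CHK_001` are hypothetical names, not part of any particular methodology library. Every failure is logged with its ID, the expected value, the actual value and optional context, and the severity can be overridden per ID.

```python
from enum import IntEnum


class Severity(IntEnum):
    IGNORE = 0
    WARNING = 1
    ERROR = 2


class CheckReporter:
    def __init__(self):
        self.overrides = {}  # check ID -> Severity, e.g. filled from command line
        self.log = []

    def set_severity(self, check_id, severity):
        # Upgrade or downgrade a single check by its unique ID.
        self.overrides[check_id] = severity

    def check(self, check_id, passed, expected, actual, context=""):
        """Return True unless the check failed at ERROR severity."""
        if passed:
            return True
        severity = self.overrides.get(check_id, Severity.ERROR)
        if severity != Severity.IGNORE:
            message = f"{severity.name} [{check_id}] expected {expected}, got {actual}"
            if context:
                message += f" ({context})"
            self.log.append(message)
        return severity != Severity.ERROR
```

With this shape, a failing check produces a line such as `ERROR [CHK_001] expected 0x10, got 0x11 (txn 3)`, which states both what was expected and what actually happened.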

Checks in BFM vs. Bus monitor

Whether bus interface checks should be done in the bus functional models or in a dedicated bus monitor is a debatable question.

Classic verification literature seems to favor an independent bus monitor. An independent bus monitor, if done right by a separate team, provides a second interpretation of the specification, and that redundancy guards against misinterpretations.

A bus monitor improves reusability and provides more accurate data for the scoreboard and functional coverage. Keep in mind that a monitor is an extra investment of effort and time: to implement the state-based checks the state has to be recreated, and the effort might come close to that of implementing a bus functional model. One can argue for reusing code from the BFM, but that defeats the purpose of building redundancy into verification.

Error injection handling in the monitor can become a big pain because the monitor lacks the context of the error injection. When checks are implemented in the BFMs, it is easy for them to disable some of the checks during error recovery and re-enable them afterwards.

Checks plan writing process

Unlike the test plan and the coverage plan, for which a writing process was described, there is no formal process for identifying the checks.

To help with the checks plan writing process, the different types of checks are identified below. They serve as a reference for identifying the check types applicable to the DUT under consideration.

Signal interface checks

DUT signal interface level checks can be of three types:

- Signal value checks: Checking various signals for illegal values such as ‘X’ and ‘Z’.

- Signal timing checks: Checking the assertion and de-assertion timing of signals. Timing is defined as a certain number of clock cycles or as an absolute duration of time.

- Signal relationship checks: Checking the relation between different signals. Examples are two signals being asserted at the same time, or an acknowledgement signal getting asserted within N cycles after the assertion of a request signal.

These signal checks can be present within the DUT, in interface abstraction constructs and in the lower layers of the BFMs handling the interface. Assertion constructs are best suited for implementing signal level checks.
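In a real test bench these checks would normally be written as assertions. The sketch below only illustrates the logic of a value check and of a request/acknowledge check in Python, over a trace that holds one sample per clock cycle. The signal names `req` and `ack`, the trace format and both function names are assumptions for the example.

```python
def find_illegal_values(trace, signal):
    """Signal value check: return the cycles where the signal is 'X' or 'Z'."""
    return [cycle for cycle, sample in enumerate(trace)
            if sample[signal] in ("X", "Z")]


def ack_within(trace, max_cycles, req="req", ack="ack"):
    """Relationship check: every rising edge of req must see ack high within
    max_cycles cycles. Returns the cycles of the failing req edges."""
    failures = []
    previous = 0
    for cycle, sample in enumerate(trace):
        if sample[req] == 1 and previous == 0:
            # Window covers the request cycle and the next max_cycles cycles.
            # A window cut short by the end of the trace counts as a failure.
            window = trace[cycle:cycle + max_cycles + 1]
            if not any(s[ack] == 1 for s in window):
                failures.append(cycle)
        previous = sample[req]
    return failures
```

Returning the failing cycle numbers, rather than a plain pass or fail, keeps the information needed for a useful error message.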

Bus protocol checks

Bus protocols are sets of rules defined for communication between peers. Communication can be full duplex or half duplex. These protocols are built on a set of transaction exchanges between the peers after link initialization. Different types of transactions are defined for the transfer of control and data information.

Each of the rules, typically called out through “should” in the standard specification, must be translated into checks. These checks can be implemented in the monitors or BFMs, on the receive path within every layer of the communication protocol implementation.

Checks are classified into checks on the fields of transactions and checks on the order or sequence of transactions.

A check on the order of transactions verifies that the received transaction type is expected. If it is, all of its fields are checked to see whether they contain the expected values.

The expected field types and values could be constants or could depend on the state and configuration of the layer.

Unexpected transactions, or expected transactions with unexpected field values, should be flagged as errors.
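The two classes of protocol checks can be sketched as a small receive-path checker. The protocol below (CONNECT, DATA, DISCONNECT) and the `max_payload` configuration field are invented for illustration and do not correspond to any real standard. The sequence check runs first, and the field check runs only when the transaction type is expected.

```python
# Hypothetical protocol: which transaction types may follow which.
EXPECTED_NEXT = {
    "IDLE": {"CONNECT"},
    "CONNECT": {"DATA", "DISCONNECT"},
    "DATA": {"DATA", "DISCONNECT"},
    "DISCONNECT": {"CONNECT"},
}


class ReceiveChecker:
    def __init__(self, max_payload=64):
        self.last = "IDLE"              # state needed for the sequence check
        self.max_payload = max_payload  # configuration-dependent field limit
        self.errors = []

    def on_receive(self, txn):
        kind = txn["type"]
        allowed = EXPECTED_NEXT[self.last]
        if kind not in allowed:
            self.errors.append(
                f"sequence: expected one of {sorted(allowed)} after {self.last}, got {kind}")
            return False
        if kind == "DATA" and not 0 < txn["length"] <= self.max_payload:
            self.errors.append(
                f"field: expected DATA length in 1..{self.max_payload}, got {txn['length']}")
            return False
        self.last = kind  # only legal transactions advance the state
        return True
```

Keeping the allowed sequences in a table makes it straightforward to review the checker against the rules listed in the specification.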

Data transformation checks

The design under test (DUT) can be thought of as a transfer function: it transforms data from one form to another. This transformation cannot be checked independently by the BFMs connected to the buses. In many cases it requires gathering data from multiple interfaces to implement the checks.

The input reference data and the actual output data can come from different interfaces and be in different forms before and after transformation by the DUT.

Checks on the correctness of data transformation and data integrity are among the most complex checks implemented in the test bench. The component implementing these checks is called the scoreboard.

The scoreboard concept is also used for implementing checks on the DUT’s status interfaces such as interrupts.
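A scoreboard can be sketched in a few lines. The version below is a simplification that assumes the DUT produces outputs in the same order as its inputs, and the `reference_model` callable stands in for whatever transformation the DUT is supposed to perform. Both are assumptions for the example; real scoreboards often have to handle reordering and dropped data.

```python
from collections import deque


class Scoreboard:
    def __init__(self, reference_model):
        self.reference_model = reference_model  # predicts DUT output from input
        self.expected = deque()
        self.errors = []

    def on_input(self, item):
        # Called by the input-side monitor: queue the predicted output.
        self.expected.append(self.reference_model(item))

    def on_output(self, actual):
        # Called by the output-side monitor: compare against the prediction.
        if not self.expected:
            self.errors.append(f"unexpected output: expected nothing, got {actual!r}")
            return False
        expected = self.expected.popleft()
        if actual != expected:
            self.errors.append(f"data mismatch: expected {expected!r}, got {actual!r}")
            return False
        return True
```

For example, `Scoreboard(lambda word: word ^ 0xFF)` would check a hypothetical DUT that inverts every byte. Anything still left in `expected` when the test finishes is data the DUT never produced.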

Application scenario checks

Many verification environments abstract the application world to improve controllability and observability. This also means many test scenarios may not exactly match application usage.

To fill this gap, specialized tests mimicking complete application behavior are created. The test bench may not implement some of the checks required for these scenarios, and those checks may not hold for many other tests. Such checks are made part of the respective tests instead of the test bench.

An example is a design containing a micro-controller, where most of the tests are run without production firmware while a few are run with it. The additional checks required when running with production firmware are implemented in the respective tests instead of the test bench, which is predominantly designed to run without production firmware.

Performance checks

Apart from functionality, designs have performance goals. Performance is defined differently for different designs. For communication interface designs it is typically defined as latency and bandwidth goals.

Test bench components are created to track the latency and bandwidth of the design interfaces. Latency checks can be active all the time, while bandwidth checks can kick in at the end of the test.

Performance checks need not be enabled for all the tests. For example, enabling latency checks during error injection tests may not be meaningful and can lead to false failures.

Performance checks should be enabled only during performance use cases. The checks should be configurable and should compute the expected values from the performance targets, so that they adapt to design changes.
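A minimal sketch of such a component is shown below. The class name `PerformanceChecker`, the nanosecond units and the two target parameters are assumptions for illustration. The latency check runs on every transfer, the bandwidth check runs once at the end of the test, and the `enabled` flag lets tests such as error injection tests switch both off.

```python
class PerformanceChecker:
    def __init__(self, max_latency_ns, min_bytes_per_ns, enabled=True):
        self.max_latency_ns = max_latency_ns      # configurable latency target
        self.min_bytes_per_ns = min_bytes_per_ns  # configurable bandwidth target
        self.enabled = enabled
        self.total_bytes = 0
        self.errors = []

    def on_transfer(self, start_ns, end_ns, num_bytes):
        # Latency is checked immediately for every transfer.
        self.total_bytes += num_bytes
        latency = end_ns - start_ns
        if self.enabled and latency > self.max_latency_ns:
            self.errors.append(
                f"latency: expected <= {self.max_latency_ns} ns, got {latency} ns")

    def end_of_test(self, test_duration_ns):
        # Bandwidth is only meaningful over the whole test.
        bandwidth = self.total_bytes / test_duration_ns
        if self.enabled and bandwidth < self.min_bytes_per_ns:
            self.errors.append(
                f"bandwidth: expected >= {self.min_bytes_per_ns} B/ns, got {bandwidth} B/ns")
        return self.errors
```

Because the targets are constructor arguments, a change in the design's performance goals only requires a configuration change, not a change to the check.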

Low power checks

Apart from functionality, designs have power goals. Low power implementation is a combination of design-functionality-independent and design-functionality-dependent logic structures.

Design-functionality-independent logic consists of structural implementations such as clock gating, power gating, and frequency and voltage scaling. There are third-party static tools to check the static correctness of these implementations.

The design-functionality-dependent part of the logic monitors the blocks’ power requirements and activates the structural low power elements to save power. To verify it, targeted tests have to be written and run in power-aware simulations. These tests can have test-specific checks for the correctness of the implementation.

I don’t know how to check if the overall power targets are met through dynamic simulation.

Clocks and resets checks

Multiple clock domains and resets are the norm for designs these days. Different types of clocks and complex reset sequencing are required to bring up the designs.

Checks on clock characteristics such as frequency, duty cycle, jitter and spread spectrum have to be implemented in the test bench.

Checks on the reset sequencing order, the timing between reset releases and the status of units post reset have to be implemented in the test bench.
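As an illustration of a clock characteristic check, the sketch below computes the period and duty cycle from recorded edge timestamps and compares them with the targets. The function name `check_clock`, the timestamp lists and the tolerance scheme are assumptions for the example. It also assumes that every rising edge is followed by a falling edge and that the timestamps are strictly increasing.

```python
def check_clock(rising_ns, falling_ns, period_ns, duty=0.5, tol=0.05):
    """Check each full clock cycle against the target period and duty cycle.

    rising_ns[i] and falling_ns[i] are the edge times of cycle i.
    tol is a relative tolerance on the period and an absolute one on the duty cycle.
    """
    errors = []
    for i in range(len(rising_ns) - 1):
        period = rising_ns[i + 1] - rising_ns[i]
        high_time = falling_ns[i] - rising_ns[i]
        if abs(period - period_ns) > tol * period_ns:
            errors.append(f"cycle {i}: period expected {period_ns} ns, got {period} ns")
        if abs(high_time / period - duty) > tol:
            errors.append(
                f"cycle {i}: duty cycle expected {duty}, got {high_time / period:.2f}")
    return errors
```

A reset sequencing check could follow the same pattern, comparing the recorded release times of the resets against the required order and spacing.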

Register checks

Registers are used for implementing the control and status logic of the design. The register structure is a combination of design-functionality-independent logic and design-functionality-dependent logic.

Verification of the design-functionality-independent logic involves being able to access each of the registers, exercising the attributes of each register bit as per the specification, and checking initial values. These checks are typically implemented as part of the tests verifying these features. Although some design-specific information such as the address map, register attributes and initial values is needed, the checks themselves can be made configurable so that they can be used across multiple designs.
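The idea of a configurable, design-independent register check can be sketched as a function driven entirely by a register map. The map format (name mapped to address, reset value and read-write mask) and the `read` and `write` callables are assumptions for the example. It also assumes registers contain only read-write and read-only bits and that reads have no side effects.

```python
def check_registers(reg_map, read, write, width=32):
    """Check reset values and read-write / read-only bit attributes.

    reg_map: {name: (address, reset_value, rw_mask)}; a 1 in rw_mask marks a
    writable bit, all other bits are treated as read-only.
    """
    all_ones = (1 << width) - 1
    errors = []
    for name, (addr, reset_value, rw_mask) in reg_map.items():
        value = read(addr)
        if value != reset_value:
            errors.append(f"{name}: reset value expected {reset_value:#x}, got {value:#x}")
        # Write all ones: writable bits must become 1, read-only bits must hold.
        write(addr, all_ones)
        expected = (reset_value & ~rw_mask & all_ones) | rw_mask
        value = read(addr)
        if value != expected:
            errors.append(f"{name}: after writing ones expected {expected:#x}, got {value:#x}")
    return errors
```

Only the register map changes from design to design, so the same check can be reused across multiple designs.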

Verification of the design-functionality-dependent logic is typically distributed across tests. Control register programming is typically verified through the expected behavior during various tests after configuration. Checks in different test bench components use the configuration, which contains the control register values programmed, to implement the checks on the behavior of the DUT.

Checking status registers for expected values is typically done as part of the reactive components of the test bench (such as interrupt handling) or inside the test implementation. Unexpected status register values are typically checked at the end of the test, for example an error status register that got updated during a normal operation test.

Self checks for reusable blocks integration

Several RTL and test bench components are reused. Since these are reusable components, it makes sense to invest in making them self-checking for correct integration. This can save a lot of debug effort later.

These checks can range from whether the right inputs are being supplied to detecting invalid usage during operation. A simple example is a reusable RTL FIFO block with checks for popping an empty FIFO (underflow) and pushing to a full FIFO (overflow).
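The FIFO example can be modelled in a few lines. In RTL these would be assertions inside the FIFO block; the Python class below, with its hypothetical names `CheckedFifo` and `FifoUsageError`, only illustrates how a reusable block can reject invalid usage by whoever integrates it.

```python
from collections import deque


class FifoUsageError(Exception):
    """Raised when the integrating code misuses the FIFO."""


class CheckedFifo:
    def __init__(self, depth):
        if depth <= 0:
            raise ValueError("depth must be positive")
        self.depth = depth
        self.items = deque()

    def push(self, item):
        # Self-check: pushing to a full FIFO is an integration error.
        if len(self.items) >= self.depth:
            raise FifoUsageError(f"overflow: push on full FIFO (depth {self.depth})")
        self.items.append(item)

    def pop(self):
        # Self-check: popping an empty FIFO is an integration error.
        if not self.items:
            raise FifoUsageError("underflow: pop on empty FIFO")
        return self.items.popleft()
```

The failure is reported at the point of misuse, which is usually much closer to the root cause than a data mismatch seen later at the scoreboard.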

End of test checks

End of test checks ensure that both the DUT and the test bench components are in the expected state after test execution completes and that no unintended behavior has been detected.

Ideally the test bench components should provide APIs that implement the end of test checks. When called, each API should check, from the block’s perspective, whether its properties and states hold acceptable values.

End of test checks should also check the DUT’s FSM states, data buffer states, status register values etc. for the expected behavior.
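One possible shape for such an API is sketched below. The `MonitorStub` component, its `outstanding` and `state` properties and the method name `end_of_test_check` are hypothetical. The point is only that each component judges its own state and the test bench collects the results.

```python
class MonitorStub:
    """Stand-in for a test bench component with some internal state."""

    def __init__(self, name):
        self.name = name
        self.outstanding = 0  # transactions started but not completed
        self.state = "IDLE"

    def end_of_test_check(self):
        # Each component reports, from its own perspective, what is wrong.
        issues = []
        if self.outstanding:
            issues.append(
                f"{self.name}: expected 0 outstanding transactions, got {self.outstanding}")
        if self.state != "IDLE":
            issues.append(f"{self.name}: expected state IDLE, got {self.state}")
        return issues


def run_end_of_test_checks(components):
    # Called once by the test bench after the test body has finished.
    return [issue for c in components for issue in c.end_of_test_check()]
```

An empty list from `run_end_of_test_checks` means every component considers itself to be in an acceptable end state.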

Watchdog timer checks

What should be done if a test never completes? What if it is hung waiting for an event that will never take place?

Typically every wait in the test bench should be guarded with a timeout. The timeout value is configured based on the maximum duration within which the awaited event is guaranteed to occur. When this is not done, the test can hang.

Hung tests have to be terminated. That is where a watchdog comes into play. A simple watchdog check is a timer configured with a timeout value based on the longest test execution time. It runs as an independent thread.

When a test does not terminate within the configured timeout value, the watchdog forcefully terminates the test with an appropriate error message.
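Both ideas, guarded waits and a global watchdog, can be sketched with Python's `asyncio`, which here stands in for the simulator's threads and events. The names `HangError`, `guarded_wait` and `run_with_watchdog` are made up for the example, and wall-clock seconds replace simulation time.

```python
import asyncio


class HangError(Exception):
    """Raised when a wait or a whole test exceeds its timeout."""


async def guarded_wait(event, timeout_s, what):
    # Every wait is guarded: the timeout is the longest time the event may take.
    try:
        await asyncio.wait_for(event.wait(), timeout_s)
    except asyncio.TimeoutError:
        raise HangError(f"timed out after {timeout_s} s waiting for {what}") from None


async def run_with_watchdog(test_coro, timeout_s):
    # Global watchdog: terminates the test if it runs longer than allowed.
    try:
        return await asyncio.wait_for(test_coro, timeout_s)
    except asyncio.TimeoutError:
        raise HangError(f"watchdog: test did not finish within {timeout_s} s") from None
```

A test would be started as `asyncio.run(run_with_watchdog(my_test(), 10.0))`, where `my_test` is the user's test coroutine. The error message names what was being waited for, which is often enough to start debugging a hang.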

Programming checks

There are many well-known defensive programming methods. These are typically part of best-known programming practices. They can be defined in the coding guidelines, but are mostly acquired by good programmers through years of practice and commitment to the craft of programming.

Some examples are checking dynamic array sizes before accessing them, checking whether randomization succeeded, and checking an object handle for null before accessing its methods or properties.
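The same habits look roughly like this in Python. The helper names `read_entry` and `randomize_length` are hypothetical, and the empty-range test is only a loose analogue of checking the return status of a constrained randomization call in a hardware verification language.

```python
import random


def read_entry(entries, index):
    # Check the handle before using it.
    if entries is None:
        raise ValueError("entries handle is None")
    # Check the size before accessing (negative indices are rejected on purpose).
    if not 0 <= index < len(entries):
        raise IndexError(f"index {index} outside 0..{len(entries) - 1}")
    return entries[index]


def randomize_length(rng, low, high):
    # Fail loudly if the constraints cannot be satisfied, instead of
    # continuing with a stale or default value.
    if low > high:
        raise ValueError(f"randomization failed: empty range {low}..{high}")
    return rng.randint(low, high)
```

Each check costs a line or two and turns a confusing downstream failure into an immediate, well-described one.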